Author: Sirmax

[[Категория:Collectd]]
[[Категория:Heka]]
[[Категория:LMA]]
[[Категория:MOS FUEL]]
[[Категория:Linux]]
[[Категория:Monitoring]]

=Monitoring=
Any complex system needs to be monitored.

LMA (Logging, Monitoring, Alerting) Fuel plugins provide a complete logging, monitoring and alerting system for Mirantis OpenStack. LMA uses open-source products and can also be integrated with existing monitoring systems.
<BR>
This manual describes a complete configuration that includes 4 plugins:
* ElasticSearch/Kibana – Log Search, Filtration and Analysis
* LMA Collector – LMA Toolchain Data Aggregation Client
* InfluxDB/Grafana – Time-Series Event Recording and Analysis
* LMA Nagios – Alerting for LMA Infrastructure
(more details about MOS Fuel plugins: [https://www.mirantis.com/products/openstack-drivers-and-plugins/fuel-plugins/ MOS Plugins overview])
<BR>
The LMA-related plugins can be used separately, but they are designed to be used together. All examples in this document come from a cloud where all 4 plugins are installed and configured.

==LMA DataFlow==
LMA is a complex system that consists of the following parts:
* [http://collectd.org/ Collectd] - data collection
* [http://hekad.readthedocs.org Heka] - data collection and aggregation
* [https://influxdata.com/ InfluxDB] - NoSQL database for time-series data; in LMA it stores the data used for the Grafana charts
* [https://www.elastic.co/ ElasticSearch] - NoSQL database; in LMA it is used to store logs
* [http://grafana.org/ Grafana] - graph and dashboard builder
* [https://www.elastic.co/products/kibana Kibana] - analytics and visualization platform
* [https://www.nagios.org/ Nagios] - monitoring system

<PRE>
Node N (compute or controller)
+------------------------------+
| * collectd                   |
|       |                      |
|       v                      |
| * hekad                      |
+-------+----------------------+
        |
        +---> [ InfluxDB ] ---------> Grafana dashboard
        |
        +---> [ ElasticSearch ] ----> Kibana dashboard
        |
        +---> to Aggregator
                   |
                   v
+------------------------------------------+
| Heka on aggregator (Controller with VIP) |<---+
+------------------------------------------+    |
        |                             |         |
        |                             +---------+  some data generated locally
        |                                          is looped back to be aggregated
        +---> to [ InfluxDB ]
        +---> to [ ElasticSearch ]
        +---> to [ Nagios ] ---> Alerts (e.g. email notifications)
</PRE>

==AFD and GSE==

[http://plugins.mirantis.com/docs/l/m/lma_collector/lma_collector-0.8-0.8.0-1.pdf Aggregator (LMA Collector plugin documentation)]

===Overview===
The process of running alarms in LMA is not centralized (as is often the case in conventional monitoring systems) but distributed across all the Collectors.
Each Collector is individually responsible for monitoring the resources and services deployed on its node and for reporting any anomaly or fault it detects to the Aggregator.
<BR>
The anomaly and fault detection logic in LMA is designed more like an “Expert System”: the Collector and the Aggregator use facts and rules that are executed within Heka's stream processing pipeline.
<BR>

The facts are the messages ingested by the Collector into the Heka pipeline.
The rules are either threshold monitoring alarms or aggregation and correlation rules.

Both kinds of rules are declaratively defined in YAML(tm) files that you can modify. They are executed by a collection of Heka filter plugins written in Lua, organised according to a configurable processing workflow.

These plugins are called the AFD plugins (Anomaly and Fault Detection) and the GSE plugins (Global Status Evaluation).
The <B>AFD</B> and <B>GSE</B> plugins in turn create metrics called the AFD metrics and the GSE metrics respectively.
<BR>
The AFD metrics contain information about the health status of a resource like a device, a system component like a filesystem, or a service like an API endpoint, at the node level.
Each Collector sends its AFD metrics to the Aggregator on a regular basis, where they are aggregated and correlated (hence the name Aggregator).
<BR>
The GSE metrics contain information about the health status of a service cluster, like the Nova API endpoints cluster or the RabbitMQ cluster, as well as of clusters of nodes, like the Compute cluster or the Controller cluster.
<BR>
The GSE plugins infer the health status of a cluster using aggregation and correlation rules and the facts contained in the AFD metrics received from the Collectors.
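The AFD-to-GSE step can be pictured with a very small model. This is a minimal sketch, not the LMA GSE plugin code: it assumes a simple "worst status wins" policy (the real GSE aggregation and correlation rules are configurable), and it only uses the two numeric status values visible in the AFD messages later on this page (0 for OK, 3 for CRITICAL). The function name `cluster_status` and the node names are hypothetical.

```python
# Hypothetical model of GSE-style aggregation, NOT the LMA GSE plugin code.
# Assumption: status values as seen in the AFD messages on this page
# (0 = OK, 3 = CRITICAL); a higher value means a worse status.
OK = 0
CRITICAL = 3


def cluster_status(afd_values):
    """Return a cluster status from per-node AFD status values.

    Assumed policy: the worst (highest) node status becomes the cluster
    status; a cluster without any reported node is treated as OK.
    """
    return max(afd_values.values(), default=OK)


# Example input: three hypothetical controller nodes, one of them CRITICAL.
controller_cluster = {"node-1": OK, "node-2": CRITICAL, "node-3": OK}
print(cluster_status(controller_cluster))  # prints 3
```

A real deployment may weigh nodes differently (for example tolerate one failed node out of three); the worst-of policy is used here only because it is the simplest rule that still shows how node-level facts become a cluster-level status.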

===Modifying CPU alarm===
Modifying an existing alarm is explained in detail in the [http://plugins.mirantis.com/docs/l/m/lma_collector/lma_collector-0.8-0.8.0-1.pdf LMA collector plugin documentation].<BR>
Here is an example with commands, output and explanations.

====Modify alarm====
As a test, we modify the existing CPU alarm.<BR>
The rule fires when cpu_idle is less than or equal to the threshold (relational_operator '<='), so to keep the alarm permanently in the 'CRITICAL' state we set the threshold above 100% of idle time. This is for demonstration only.
<BR>
In the <B>/etc/hiera/override/alarming.yaml</B> file, replace the cpu_idle threshold with 150, which means '150% of CPU idle':

<PRE>
lma_collector:
  alarms:
    - name: 'cpu-critical-controller'
      description: 'The CPU usage is too high (controller node).'
      severity: 'critical'
      enabled: 'true'
      trigger:
        logical_operator: 'or'
        rules:
          - metric: cpu_idle
            relational_operator: '<='
            threshold: 150
            window: 120
            periods: 0
            function: avg
<SKIP>
</PRE>
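One way to read the rule above: take the average (`function: avg`) of the `cpu_idle` values received during the last `window` seconds and fire the alarm when that average is `<=` the threshold; with `logical_operator: 'or'` any firing rule fires the alarm. The sketch below models only that reading. It is not the afd.lua implementation: it ignores `periods`, supports only `avg`, and the helper names are hypothetical. It shows why a threshold of 150 keeps the alarm CRITICAL, since an idle percentage can never exceed it.

```python
import operator
import time

# Relational operators as spelled in the alarm definition.
OPERATORS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
    "==": operator.eq,
}


def rule_fires(rule, samples_by_metric, now):
    """Evaluate one threshold rule (simplified model, not afd.lua).

    samples_by_metric maps a metric name to a list of (timestamp, value).
    Only samples inside the window are used; 'periods' is ignored.
    """
    window = float(rule["window"])
    values = [v for (t, v) in samples_by_metric.get(rule["metric"], [])
              if now - t <= window]
    if not values:
        return False  # no data in the window: this model does not fire
    if rule["function"] != "avg":
        raise ValueError("this sketch only models function 'avg'")
    avg = sum(values) / len(values)
    # float() also accepts thresholds written as strings, e.g. '150'.
    return OPERATORS[rule["relational_operator"]](avg, float(rule["threshold"]))


def alarm_fires(alarm, samples_by_metric, now):
    """Combine rule results with the trigger's logical operator."""
    trigger = alarm["trigger"]
    results = [rule_fires(r, samples_by_metric, now) for r in trigger["rules"]]
    return any(results) if trigger["logical_operator"] == "or" else all(results)


cpu_alarm = {
    "name": "cpu-critical-controller",
    "severity": "critical",
    "trigger": {
        "logical_operator": "or",
        "rules": [{
            "metric": "cpu_idle",
            "relational_operator": "<=",
            "threshold": 150,
            "window": 120,
            "periods": 0,
            "function": "avg",
        }],
    },
}

now = time.time()
# Example cpu_idle samples (illustrative values, not taken from the cluster).
samples = {"cpu_idle": [(now - 20, 62.3), (now - 10, 39.2)]}
print(alarm_fires(cpu_alarm, samples, now))  # True: the average is about 50.75
```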

Next, run puppet to rebuild the LMA configuration:
<PRE>
puppet apply --modulepath=/etc/fuel/plugins/lma_collector-0.8/puppet/modules/ /etc/fuel/plugins/lma_collector-0.8/puppet/manifests/configure_afd_filters.pp
</PRE>

Heka needs to be restarted to pick up the new configuration, so check Heka's start time:
<PRE>
ps auxfw | grep heka
...
heka 22518 4.5 4.4 809992 134068 pts/25 Sl+ 12:02 6:30 \_ hekad -config /etc/lma_collector/
root@node-6:/etc/hiera/override# date
Thu Feb 11 12:04:47 UTC 2016
</PRE>
On the demo cluster where all the commands were executed, Heka runs inside screen and was restarted manually, so the output of these commands on your cluster may differ.
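Comparing the START column of `ps` with `date` by eye works, but the check can also be scripted. A minimal sketch, assuming procps `ps` (which supports `-C` to select processes by name and `-o lstart=` to print the full start time without a header) and a process named `hekad`; the helper names are hypothetical. It reports whether hekad was started after a given moment, for example after the puppet run.

```python
import os
import subprocess
from datetime import datetime

# 'lstart' prints the full start time, e.g. "Thu Feb 11 12:02:10 2016".
LSTART_FORMAT = "%a %b %d %H:%M:%S %Y"


def parse_lstart(text):
    """Parse one 'ps -o lstart=' value; ps pads single-digit days with spaces."""
    return datetime.strptime(" ".join(text.split()), LSTART_FORMAT)


def process_start_times(process_name="hekad"):
    """Return the start times of all processes with this name (procps ps assumed)."""
    result = subprocess.run(
        ["ps", "-C", process_name, "-o", "lstart="],
        capture_output=True,
        text=True,
        env=dict(os.environ, LC_ALL="C"),  # English day/month names for strptime
    )
    # ps exits with status 1 when nothing matches; that simply yields [].
    return [parse_lstart(line) for line in result.stdout.splitlines() if line.strip()]


def restarted_after(start_times, moment):
    """True if at least one of the processes was started after 'moment'."""
    return any(t > moment for t in start_times)
```

Usage: record `datetime.now()` just before running puppet, then call `restarted_after(process_start_times(), that_moment)`. Note that `lstart` is printed in local time, so the reference moment must be local time too.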

====Data flow====
We can follow the data flow and see cpu_idle at each step.
First, let's check collectd with debugging enabled (debugging of collectd is described in detail in the [http://wiki.sirmax.noname.com.ua/index.php/Collectd#Debug_with_your_own_write_plugin Collectd] document):

<PRE>
collectd.Values(type='cpu',type_instance='idle',plugin='cpu',plugin_instance='0',host='node-6',time=1455198002.296594,interval=10.0,values=[29482997])
</PRE>

Next, we can see this data in Heka:
<PRE>
:Timestamp: 2016-02-11 12:17:12.296999936 +0000 UTC
:Type: metric
:Hostname: node-6
:Pid: 22518
:Uuid: d40bce11-ccb5-4d52-a7d0-7927424b2709
:Logger: collectd
:Payload: {"type":"cpu","values":[62.2994],"type_instance":"idle","dsnames":["value"],"plugin":"cpu","time":1455193032.297,"interval":10,"host":"node-6","dstypes":["derive"],"plugin_instance":"0"}
:EnvVersion:
:Severity: 6
:Fields:
    | name:"type" type:string value:"derive"
    | name:"source" type:string value:"cpu"
    | name:"deployment_mode" type:string value:"ha_compact"
    | name:"deployment_id" type:string value:"3"
    | name:"openstack_roles" type:string value:"primary-controller"
    | name:"openstack_release" type:string value:"2015.1.0-7.0"
    | name:"tag_fields" type:string value:"cpu_number"
    | name:"openstack_region" type:string value:"RegionOne"
    | name:"name" type:string value:"cpu_idle"
    | name:"hostname" type:string value:"node-6"
    | name:"value" type:double value:62.2994
    | name:"environment_label" type:string value:"test2"
    | name:"interval" type:double value:10
    | name:"cpu_number" type:string value:"0"
</PRE>
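The two samples above show the same metric at different stages (and at different times): collectd reports `values=[29482997]`, a raw, ever-increasing DERIVE counter, while the Heka message carries `"values":[62.2994]` with `"dstypes":["derive"]`, i.e. a per-second rate. A DERIVE rate is the difference between two consecutive samples divided by the time between them; collectd's write_http plugin performs this conversion when its `StoreRates` option is enabled, which is assumed here for this setup. A minimal sketch of the arithmetic; `derive_rate` is a hypothetical helper and the second sample is illustrative.

```python
def derive_rate(prev, curr):
    """Per-second rate between two DERIVE samples given as (time, value)."""
    (t0, v0), (t1, v1) = prev, curr
    if t1 <= t0:
        raise ValueError("samples must be in increasing time order")
    return (v1 - v0) / (t1 - t0)


# First sample from the collectd output above; the second one is made up,
# taken 10 s later (the collectd interval in this setup).
prev = (1455198002.296594, 29482997)
curr = (1455198012.296594, 29483620)
print(round(derive_rate(prev, curr), 1))  # prints 62.3
```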

The message matches the message_matcher of the afd_node_controller_cpu filter, so it is delivered to afd_node_controller_cpu_filter:
<PRE>
filter-afd_node_controller_cpu.toml:message_matcher = "(Type == 'metric' || Type == 'heka.sandbox.metric') && (Fields[name] == 'cpu_idle' || Fields[name] == 'cpu_wait')"
</PRE>
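Heka's message matcher syntax is its own small expression language. The Python function below is only a hand translation of this particular `message_matcher` (an illustration, not a Heka API), written against the message Type and its field dictionary: a message is delivered to the filter when its Type is `metric` or `heka.sandbox.metric` and its `name` field is `cpu_idle` or `cpu_wait`.

```python
def afd_cpu_matcher(msg_type, fields):
    """Hand translation of the message_matcher of filter-afd_node_controller_cpu.toml:

    (Type == 'metric' || Type == 'heka.sandbox.metric')
      && (Fields[name] == 'cpu_idle' || Fields[name] == 'cpu_wait')
    """
    return (msg_type in ("metric", "heka.sandbox.metric")
            and fields.get("name") in ("cpu_idle", "cpu_wait"))


# The collectd metric shown above matches; the AFD output message does not.
print(afd_cpu_matcher("metric", {"name": "cpu_idle", "value": 62.2994}))  # True
print(afd_cpu_matcher("heka.sandbox.afd_node_metric", {"name": "node_status"}))  # False
```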

And the filter generates an alarm:
<PRE>
:Timestamp: 2016-02-11 13:30:46 +0000 UTC
:Type: heka.sandbox.afd_node_metric
:Hostname: node-6
:Pid: 0
:Uuid: d28b4847-310f-400d-a2ef-66b59b69cfe4
:Logger: afd_node_controller_cpu_filter
:Payload: {"alarms":[{"periods":1,"tags":{},"severity":"CRITICAL","window":120,"operator":"<=","function":"avg","fields":{},"metric":"cpu_idle","message":"The CPU usage is too high (controller node).","threshold":150,"value":50.740816666667}]}
:EnvVersion:
:Severity: 7
:Fields:
    | name:"environment_label" type:string value:"test2"
    | name:"source" type:string value:"cpu"
    | name:"node_role" type:string value:"controller"
    | name:"openstack_release" type:string value:"2015.1.0-7.0"
    | name:"tag_fields" type:string value:["node_role","source"]
    | name:"openstack_region" type:string value:"RegionOne"
    | name:"name" type:string value:"node_status"
    | name:"hostname" type:string value:"node-6"
    | name:"deployment_mode" type:string value:"ha_compact"
    | name:"openstack_roles" type:string value:"primary-controller"
    | name:"deployment_id" type:string value:"3"
    | name:"value" type:double value:3
</PRE>
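The Payload of an AFD message is plain JSON, so the active alarms can be listed with a few lines of code. A minimal sketch using the payload shown above; `active_alarms` is a hypothetical helper. An empty list (`{"alarms":[]}`) means that no rule fired.

```python
import json


def active_alarms(payload):
    """Return (severity, metric, value, threshold) tuples from an AFD payload."""
    return [
        (a["severity"], a["metric"], a["value"], a["threshold"])
        for a in json.loads(payload)["alarms"]
    ]


payload = ('{"alarms":[{"periods":1,"tags":{},"severity":"CRITICAL","window":120,'
           '"operator":"<=","function":"avg","fields":{},"metric":"cpu_idle",'
           '"message":"The CPU usage is too high (controller node).",'
           '"threshold":150,"value":50.740816666667}]}')

for severity, metric, value, threshold in active_alarms(payload):
    print(f"{severity}: {metric} avg={value:.2f} threshold={threshold}")
# prints: CRITICAL: cpu_idle avg=50.74 threshold=150
```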

This message is sent to Nagios by the nagios_afd_nodes_output plugin:
<PRE>
[nagios_afd_nodes_output]
type = "HttpOutput"
message_matcher = "Fields[aggregator] == NIL && Type == 'heka.sandbox.afd_node_metric'"
encoder = "nagios_afd_nodes_encoder"
<SKIP>
</PRE>

====Result====
In Nagios we can see the alert:
<BR>
[[Изображение:04 Nagios Core 2016-02-11 16-54-17.png|600px]]
<BR>
As you can see, the threshold is 150, as configured:
<BR>
[[Изображение:05 Nagios Core 2016-02-11 16-59-15.png|600px]]

===Create new alarm===
====Data Flow====
=====Data in Collectd=====
First, Collectd has to collect the data. In this example we use a [http://wiki.sirmax.noname.com.ua/index.php/Collectd#Custom_read_Plugin custom read plugin] which simply reads a value from a file.
<BR>Example of data provided by the plugin:
<PRE>
collectd.Values(type='read_data',type_instance='read_data',plugin='read_file_demo_plugin',plugin_instance='read_file_plugin_instance',host='node-6',time=1455205416.4896111,interval=10.0,values=[888999888.0],meta={'0': True})
read_file_demo_plugin (read_data): 888999888.000000
</PRE>
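The read plugin itself is described on the linked page. For readers who want to reproduce the example, here is a minimal sketch of such a plugin for collectd's Python plugin (collectd-python), reusing the plugin and type names from the output above and the input file /var/log/collectd_in_data used later in this section; it is not the original plugin. Assumptions: the custom type `read_data` has to be declared in a types.db file (for example `read_data value:GAUGE:U:U`), and the module is loaded with the usual `<Plugin python>` block (`ModulePath`, `Import`). The file parsing is kept in plain functions so it can be tried outside collectd.

```python
# Minimal sketch of a collectd-python read plugin (not the original plugin).
DATA_FILE = "/var/log/collectd_in_data"


def parse_value(text):
    """Return the first number in the text, or None if there is none."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return None


def read_value(path=DATA_FILE):
    """Read the value from the data file; None if the file is missing or empty."""
    try:
        with open(path) as f:
            return parse_value(f.read())
    except OSError:
        return None


try:
    import collectd  # only importable inside the collectd daemon
except ImportError:
    collectd = None


def read_callback():
    value = read_value()
    if value is None:
        return  # nothing to dispatch this interval
    vl = collectd.Values(
        plugin="read_file_demo_plugin",
        plugin_instance="read_file_plugin_instance",
        type="read_data",           # assumed custom type, must exist in types.db
        type_instance="read_data",
    )
    vl.dispatch(values=[value])


if collectd is not None:
    collectd.register_read(read_callback)
```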

=====Data in Heka=====
Data coming from collectd:
<PRE>
:Timestamp: 2016-02-11 15:45:36.490000128 +0000 UTC
:Type: metric
:Hostname: node-6
:Pid: 22518
:Uuid: ab07cf60-55b6-41c9-a530-4e88dbe6ebc8
:Logger: collectd
:Payload: {"type":"read_data","values":[889000000],"type_instance":"read_data","meta":{"0":true},"dsnames":["value"],"plugin":"read_file_demo_plugin","time":1455205536.49,"interval":10,"host":"node-6","dstypes":["gauge"],"plugin_instance":"read_file_plugin_instance"}
:EnvVersion:
:Severity: 6
:Fields:
    | name:"environment_label" type:string value:"test2"
    | name:"source" type:string value:"read_file_demo_plugin"
    | name:"deployment_mode" type:string value:"ha_compact"
    | name:"openstack_release" type:string value:"2015.1.0-7.0"
    | name:"openstack_roles" type:string value:"primary-controller"
    | name:"openstack_region" type:string value:"RegionOne"
    | name:"name" type:string value:"read_data_read_data"
    | name:"hostname" type:string value:"node-6"
    | name:"value" type:double value:8.89e+08
    | name:"deployment_id" type:string value:"3"
    | name:"type" type:string value:"gauge"
    | name:"interval" type:double value:10
</PRE>
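When following a message through the pipeline it is handy to turn the `:Fields:` part of such a debug dump into a dictionary, for example to find the metric name (`read_data_read_data`) needed by the filter's message_matcher. A minimal sketch, assuming the dump format shown above (one `| name:"..." type:... value:...` line per field); `parse_fields` is a hypothetical helper, not part of Heka.

```python
import json
import re

# One field line of the dump, e.g.:  | name:"value" type:double value:8.89e+08
FIELD_RE = re.compile(
    r'^\|\s*name:"(?P<name>[^"]*)"\s+type:(?P<type>\w+)\s+value:(?P<value>.*)$'
)


def parse_fields(dump):
    """Return {field name: value} for every field line in a Heka debug dump."""
    fields = {}
    for line in dump.splitlines():
        m = FIELD_RE.match(line.strip())
        if not m:
            continue  # header lines such as :Type: or :Payload:
        raw = m.group("value").strip()
        if m.group("type") == "double":
            fields[m.group("name")] = float(raw)
            continue
        try:
            # Strings are quoted and lists look like ["node_role","source"].
            fields[m.group("name")] = json.loads(raw)
        except ValueError:
            fields[m.group("name")] = raw
    return fields


sample = '''
:Logger: collectd
:Fields:
    | name:"name" type:string value:"read_data_read_data"
    | name:"value" type:double value:8.89e+08
    | name:"tag_fields" type:string value:["node_role","source"]
'''
fields = parse_fields(sample)
print(fields["name"], fields["value"])  # prints: read_data_read_data 889000000.0
```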

====Filter configuration====
Configure the filter manually by adding one more instance of afd.lua:
<PRE>
[afd_node_controller_read_data_filter]
type = "SandboxFilter"
filename = "/usr/share/lma_collector/filters/afd.lua"
preserve_data = false
message_matcher = "(Type == 'metric' || Type == 'heka.sandbox.metric') && (Fields[name] == 'read_data_read_data')"
ticker_interval = 10

[afd_node_controller_read_data_filter.config]
hostname = 'node-6'
afd_type = 'node'
afd_file = 'lma_alarms_read_data'
afd_cluster_name = 'controller'
afd_logical_name = 'read_data'
</PRE>
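When more than one custom metric needs an alarm, writing these sections by hand gets repetitive. The sketch below renders a section with exactly the keys used above for an arbitrary metric. `afd_filter_toml` and its parameters are hypothetical, and the section naming pattern `afd_node_<cluster>_<logical name>_filter` is an assumption inferred from the two filter names on this page; for existing alarms puppet generates this configuration, and a hand-made file still has to be placed in the Heka configuration directory (`/etc/lma_collector/` in the `hekad -config` command line above).

```python
def afd_filter_toml(metric, logical_name, hostname, cluster="controller",
                    afd_file=None, ticker_interval=10):
    """Render an afd.lua SandboxFilter section like the one above (hypothetical helper)."""
    section = f"afd_node_{cluster}_{logical_name}_filter"  # assumed naming pattern
    afd_file = afd_file or f"lma_alarms_{logical_name}"
    return "\n".join([
        f"[{section}]",
        'type = "SandboxFilter"',
        'filename = "/usr/share/lma_collector/filters/afd.lua"',
        "preserve_data = false",
        "message_matcher = \"(Type == 'metric' || Type == 'heka.sandbox.metric')"
        f" && (Fields[name] == '{metric}')\"",
        f"ticker_interval = {ticker_interval}",
        "",
        f"[{section}.config]",
        f"hostname = '{hostname}'",
        "afd_type = 'node'",
        f"afd_file = '{afd_file}'",
        f"afd_cluster_name = '{cluster}'",
        f"afd_logical_name = '{logical_name}'",
    ])


print(afd_filter_toml("read_data_read_data", "read_data", "node-6"))
```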

We also need to create the alarm definition, because this is a new alarm (for existing alarms the definition is generated by puppet).
<BR>File <B>/usr/share/heka/lua_modules/lma_alarms_read_data.lua</B>:
<PRE>
local M = {}
setfenv(1, M) -- Remove external access to contain everything in the module

local alarms = {
    {
        ['name'] = 'cpu-critical-controller',
        ['description'] = 'Read data (controller node).',
        ['severity'] = 'critical',
        ['trigger'] = {
            ['logical_operator'] = 'or',
            ['rules'] = {
                {
                    ['metric'] = 'read_data_read_data',
                    ['fields'] = {},
                    ['relational_operator'] = '<=',
                    ['threshold'] = '150',
                    ['window'] = '120',
                    ['periods'] = '0',
                    ['function'] = 'avg',
                },
            },
        },
    },
}

return alarms
</PRE>

====Nagios Configuration====
We also need to add a service definition and a command definition to Nagios.
* Command definition (lma_services_commands.cfg)
<PRE>
define command {
    command_line    /usr/lib/nagios/plugins/check_dummy 3 'No data received for at least 130 seconds'
    command_name    return-unknown-node-6.controller.read_data
}
</PRE>

* Service definition (lma_services.cfg)
<PRE>
define service {
    active_checks_enabled     0
    check_command             return-unknown-node-6.controller.read_data
    check_freshness           1
    check_interval            1
    contact_groups            openstack
    freshness_threshold       65
    host_name                 node-6
    max_check_attempts        2
    notifications_enabled     0
    passive_checks_enabled    1
    process_perf_data         0
    retry_interval            1
    service_description       controller.read_data
    use                       generic-service
}
</PRE>
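This service accepts only passive results (`active_checks_enabled 0`, `passive_checks_enabled 1`). With `check_freshness 1`, if no passive result arrives within `freshness_threshold` seconds, Nagios runs the `check_command` itself; here that is `check_dummy 3`, and exit code 3 means UNKNOWN in the Nagios plugin convention (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). A simplified model of that decision, ignoring Nagios scheduling details and latency; `service_state` is a hypothetical name.

```python
# Nagios plugin exit codes.
STATES = {0: "OK", 1: "WARNING", 2: "CRITICAL", 3: "UNKNOWN"}


def service_state(last_result_time, last_state, now,
                  freshness_threshold=65, stale_exit_code=3):
    """Simplified model of a passive service with check_freshness enabled.

    If the last passive result is older than freshness_threshold seconds
    (or there is none), Nagios runs check_command, here 'check_dummy 3',
    which yields UNKNOWN. Otherwise the last passive result is kept.
    """
    if last_result_time is None or now - last_result_time > freshness_threshold:
        return STATES[stale_exit_code]
    return STATES[last_state]


print(service_state(last_result_time=1000, last_state=2, now=1030))  # CRITICAL
print(service_state(last_result_time=1000, last_state=2, now=1100))  # UNKNOWN
```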

====Results====
The collectd plugin reads its value from /var/log/collectd_in_data, so to get the "OK" state we need to write any number greater than 150 (the threshold configured in the alarm) into this file:
<PRE>
echo 15188899 > /var/log/collectd_in_data
</PRE>

The message generated by the AFD filter is then:
<PRE>
:Timestamp: 2016-02-11 16:13:18 +0000 UTC
:Type: heka.sandbox.afd_node_metric
:Hostname: node-6
:Pid: 0
:Uuid: 7f17e0fe-d8c5-477d-a6c4-64e9234fbd93
:Logger: afd_node_controller_read_data_filter
:Payload: {"alarms":[]}
:EnvVersion:
:Severity: 7
:Fields:
    | name:"environment_label" type:string value:"test2"
    | name:"source" type:string value:"read_data"
    | name:"node_role" type:string value:"controller"
    | name:"openstack_release" type:string value:"2015.1.0-7.0"
    | name:"tag_fields" type:string value:["node_role","source"]
    | name:"openstack_region" type:string value:"RegionOne"
    | name:"name" type:string value:"node_status"
    | name:"hostname" type:string value:"node-6"
    | name:"deployment_mode" type:string value:"ha_compact"
    | name:"openstack_roles" type:string value:"primary-controller"
    | name:"deployment_id" type:string value:"3"
    | name:"value" type:double value:0
    | name:"aggregator" type:string value:"present"
</PRE>

And in Nagios we can see the "OK" status:
<BR>
[[Изображение:08 Nagios Core 2016-02-11 21-58-30.png|600px]]
<BR>

* Next, we can simulate the CRITICAL state:
<PRE>
echo 1 > /var/log/collectd_in_data
</PRE>

Data in Heka:
<PRE>
:Timestamp: 2016-02-11 16:44:53 +0000 UTC
:Type: heka.sandbox.afd_node_metric
:Hostname: node-6
:Pid: 0
:Logger: afd_node_controller_read_data_filter
:Payload: {"alarms":[{"periods":1,"tags":{},"severity":"CRITICAL","window":120,"operator":"<=","function":"avg","fields":{},"metric":"read_data_read_data","message":"Read data (controller node).","threshold":150,"value":1}]}
:EnvVersion:
:Severity: 7
:Fields:
    | name:"environment_label" type:string value:"test2"
    | name:"source" type:string value:"read_data"
    | name:"node_role" type:string value:"controller"
    | name:"openstack_release" type:string value:"2015.1.0-7.0"
    | name:"tag_fields" type:string value:["node_role","source"]
    | name:"openstack_region" type:string value:"RegionOne"
    | name:"name" type:string value:"node_status"
    | name:"hostname" type:string value:"node-6"
    | name:"deployment_mode" type:string value:"ha_compact"
    | name:"openstack_roles" type:string value:"primary-controller"
    | name:"deployment_id" type:string value:"3"
    | name:"value" type:double value:3
    | name:"aggregator" type:string value:"present"
</PRE>

[[Изображение:09 Nagios Core 2016-02-11 21-59-03.png|600px]]

==GO DEEPER!==
The following sections describe each part of LMA.
===Collectd===
Collectd collects the data; all details about collectd are in a separate document:
* [http://wiki.sirmax.noname.com.ua/index.php/Collectd Collectd in LMA detailed review]

===Heka===
Heka is a complex tool, so the data flow in Heka is described in separate documents, divided into parts:
* [http://wiki.sirmax.noname.com.ua/index.php/Heka Heka in general]
* [http://wiki.sirmax.noname.com.ua/index.php/Heka_Inputs Heka inputs details]
* [http://wiki.sirmax.noname.com.ua/index.php/Heka_Splitters Heka Splitters]
* [http://wiki.sirmax.noname.com.ua/index.php/Heka_Decoders Heka Decoders]
* [http://wiki.sirmax.noname.com.ua/index.php/Heka_Debugging Heka debugging review]
* [http://wiki.sirmax.noname.com.ua/index.php/Heka_Filter_afd_example How to create your own Heka filter, Output and Nagios integration]

===Kibana and Grafana===
TBD

===Nagios===
Passive checks overview: TBD

Current version as of 13:37, 12 February 2016

Monitoring

Any complex system needs to be monitored.

The LMA (Logging, Monitoring, Alerting) Fuel plugins provide a complete logging, monitoring, and alerting system for Mirantis OpenStack. LMA uses open-source products and can also be integrated with existing monitoring systems.

This manual describes a complete configuration that includes four plugins:

- ElasticSearch/Kibana – Log Search, Filtration and Analysis

- LMA Collector – LMA Toolchain Data Aggregation Client

- InfluxDB/Grafana – Time-Series Event Recording and Analysis

- LMA Nagios – Alerting for LMA Infrastructure

(More details about MOS Fuel plugins: MOS Plugins overview.)

The LMA plugins can be used separately, but they are designed to work together. All examples in this document come from a cloud where all four plugins are installed and configured.

LMA DataFlow

LMA is a complex system that consists of the following parts:

- Collectd - data collection

- Heka - data collection and aggregation

- InfluxDB - NoSQL time-series database; in LMA it stores the data charted in Grafana

- ElasticSearch - NoSQL database; in LMA it stores logs

- Grafana - graph and dashboard builder

- Kibana - analytics and visualization platform

- Nagios - monitoring system

+--------------------------------+
| Node N (compute or controller) |
|                                |
|   * collectd ---+              |
|                 |              |
|   * hekad <-----+              |
|       |                        |
+-------|------------------------+
        |
        |  to Aggregator    +--------------------------------------------+
        +------------------>| Heka on aggregator (controller with VIP)   |<--+
        |                   +--------------------------------------------+   |  some data generated locally
        |                      |           |                 |     |         |  is looped back to be aggregated
        |                      |           |                 |     +---------+
        |                      |to Influx  |to ElasticSearch |to Nagios
        |  to InfluxDB         v           v                 v
        +---------------->[ InfluxDB ] [ ElasticSearch ] [ Nagios ] ---> Alerts (e.g. email notifications)
        |  to ElasticSearch     |            ^     |
        +-----------------------|------------+     |
                                v                  v
                        Grafana dashboard   Kibana dashboard

AFD and GSE

Overview

The process of running alarms in LMA is not centralized (as is often the case in conventional monitoring systems) but distributed across all the Collectors.

Each Collector is individually responsible for monitoring the resources and services deployed on its node and for reporting any anomaly or fault it detects to the Aggregator.

The anomaly and fault detection logic in LMA is designed more like an “Expert System”: the Collector and the Aggregator use facts and rules that are executed within Heka's stream-processing pipeline.

The facts are the messages ingested by the Collector into the Heka pipeline. The rules are either threshold monitoring alarms or aggregation and correlation rules.

Both are declaratively defined in YAML(tm) files that you can modify. Those rules are executed by a collection of Heka filter plugins written in Lua that are organised according to a configurable processing workflow.

We call these plugins the AFD (Anomaly and Fault Detection) plugins and the GSE (Global Status Evaluation) plugins.

Both the AFD and GSE plugins in turn create metrics called the AFD metrics and the GSE metrics respectively.

The AFD metrics contain information about the health status of a resource, such as a device, a system component such as a filesystem, or a service such as an API endpoint, at the node level.
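The threshold rules described above can be illustrated with a minimal Python sketch. It is not the afd.lua implementation: it assumes in-memory (timestamp, value) samples, implements only the `avg`, `min` and `max` functions, and ignores `periods`. The rule fields (`metric`, `relational_operator`, `threshold`, `window`, `function`) are the ones used in the alarm definitions in this document; the class name `ThresholdRule` is hypothetical.

```python
import operator
from collections import deque
from statistics import mean

# Relational operators as written in the alarm definitions.
OPERATORS = {
    "<": operator.lt,
    "<=": operator.le,
    ">": operator.gt,
    ">=": operator.ge,
    "==": operator.eq,
}

# Only a few aggregation functions, for illustration.
FUNCTIONS = {"avg": mean, "min": min, "max": max}


class ThresholdRule:
    """Sketch of one AFD-style rule: aggregate a metric over a sliding
    time window and compare the result with a threshold."""

    def __init__(self, metric, relational_operator, threshold, window, function="avg"):
        self.metric = metric
        self.compare = OPERATORS[relational_operator]
        self.threshold = float(threshold)  # definitions may store it as a string
        self.window = float(window)        # seconds
        self.aggregate = FUNCTIONS[function]
        self.samples = deque()             # (timestamp, value) pairs, oldest first

    def add(self, timestamp, value):
        self.samples.append((timestamp, float(value)))
        # Drop samples that are older than the window.
        while self.samples and self.samples[0][0] <= timestamp - self.window:
            self.samples.popleft()

    def triggered(self):
        """Return (fired, aggregated_value), or (False, None) without data."""
        if not self.samples:
            return False, None
        value = self.aggregate([v for _, v in self.samples])
        return self.compare(value, self.threshold), value


# The demo CPU rule used later: cpu_idle <= 150, averaged over 120 s.
rule = ThresholdRule("cpu_idle", "<=", 150, 120, "avg")
for t, idle in [(0, 40.0), (10, 55.0), (20, 57.2)]:
    rule.add(t, idle)
print(rule.triggered())
```

With a threshold of 150 any cpu_idle average fires the rule, because idle time cannot exceed 100%.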

These AFD metrics are then sent at regular intervals by each Collector to the Aggregator, where they are aggregated and correlated (hence the name Aggregator).

The GSE metrics contain information about the health status of a service cluster, like the Nova API endpoints cluster, or the RabbitMQ cluster as well as the clusters of nodes, like the Compute cluster or Controller cluster.

The health status of a cluster is inferred by the GSE plugins using aggregation and correlation rules and facts contained in the AFD metrics it receives from the Collectors.
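A GSE-style aggregation can be sketched the same way. Both the policy and the numeric codes are assumptions for illustration: OKAY=0 and CRIT=3 match the `node_status` values in the AFD messages later in this document (0 with no alarms, 3 with a CRITICAL alarm), WARN=1 and UNKW=2 are guesses, and real GSE policies in LMA are configurable. The node names other than node-6 are hypothetical.

```python
from enum import IntEnum


class Status(IntEnum):
    # Hypothetical codes; only OKAY=0 and CRIT=3 are seen in this document.
    OKAY = 0
    WARN = 1
    UNKW = 2
    CRIT = 3


def cluster_status(member_statuses):
    """Hypothetical policy: CRIT if every member is CRIT, WARN if any
    member is not OKAY, OKAY otherwise; UNKW when no member reported."""
    statuses = list(member_statuses.values())
    if not statuses:
        return Status.UNKW
    if all(s == Status.CRIT for s in statuses):
        return Status.CRIT
    if any(s != Status.OKAY for s in statuses):
        return Status.WARN
    return Status.OKAY


# AFD statuses reported by three controllers for one service cluster.
nova_api = {"node-6": Status.OKAY, "node-7": Status.CRIT, "node-8": Status.OKAY}
print(cluster_status(nova_api).name)  # WARN
```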

Modifying CPU alarm

Modifying an existing alarm is explained in detail in the LMA Collector plugin documentation.

Here is a worked example with commands, output, and explanations.

Modify alarm

For a test, we can modify the existing CPU alarm.

To make sure the alarm is always in the 'CRITICAL' state, we can set the cpu_idle threshold above 100%. Of course, this is only for the demo.

So in the /etc/hiera/override/alarming.yaml file we set the cpu_idle threshold to 150, i.e. '150% CPU idle'. Since cpu_idle can never exceed 100%, the condition cpu_idle <= 150 is always true.

lma_collector:
  alarms:
    - name: 'cpu-critical-controller'
      description: 'The CPU usage is too high (controller node).'
      severity: 'critical'
      enabled: 'true'
      trigger:
        logical_operator: 'or'
        rules:
          - metric: cpu_idle
            relational_operator: '<='
            threshold: 150
            window: 120
            periods: 0
            function: avg
<SKIP>

Next, run Puppet to rebuild the LMA configuration:

puppet apply --modulepath=/etc/fuel/plugins/lma_collector-0.8/puppet/modules/ /etc/fuel/plugins/lma_collector-0.8/puppet/manifests/configure_afd_filters.pp

Heka needs to be restarted, so check the hekad start time:

ps -auxfw | grep heka
...
heka     22518  4.5  4.4 809992 134068 pts/25   Sl+  12:02   6:30  \_ hekad -config /etc/lma_collector/
root@node-6:/etc/hiera/override# date
Thu Feb 11 12:04:47 UTC 2016

On the demo cluster where all commands were executed, Heka runs inside screen and was restarted manually, so the output of these commands may differ on your system.

Data flow

We can follow the data flow and see cpu_idle at each step. First, check collectd with debugging enabled (collectd debugging is described in detail in the Collectd document):

collectd.Values(type='cpu',type_instance='idle',plugin='cpu',plugin_instance='0',host='node-6',time=1455198002.296594,interval=10.0,values=[29482997])

Next, we can see this data in Heka:

:Timestamp: 2016-02-11 12:17:12.296999936 +0000 UTC

:Type: metric

:Hostname: node-6

:Pid: 22518

:Uuid: d40bce11-ccb5-4d52-a7d0-7927424b2709

:Logger: collectd

:Payload: {"type":"cpu","values":[62.2994],"type_instance":"idle","dsnames":["value"],"plugin":"cpu","time":1455193032.297,"interval":10,"host":"node-6","dstypes":["derive"],"plugin_instance":"0"}

:EnvVersion:

:Severity: 6

:Fields:

| name:"type" type:string value:"derive"

| name:"source" type:string value:"cpu"

| name:"deployment_mode" type:string value:"ha_compact"

| name:"deployment_id" type:string value:"3"

| name:"openstack_roles" type:string value:"primary-controller"

| name:"openstack_release" type:string value:"2015.1.0-7.0"

| name:"tag_fields" type:string value:"cpu_number"

| name:"openstack_region" type:string value:"RegionOne"

| name:"name" type:string value:"cpu_idle"

| name:"hostname" type:string value:"node-6"

| name:"value" type:double value:62.2994

| name:"environment_label" type:string value:"test2"

| name:"interval" type:double value:10

| name:"cpu_number" type:string value:"0"
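The collectd debug line shows the raw cumulative counter (`values=[29482997]`, data source type `derive`), while Heka receives `62.2994`, a per-second rate; the two samples above were taken at different times, so they cannot be compared directly. The conversion can be sketched as follows, assuming two consecutive samples of the same counter. Here it apparently happens in collectd, since the JSON payload already carries the rate. Treating a negative difference as a counter reset is an assumption of the sketch, and the counter values in the example are hypothetical. For the cpu plugin the counter is in jiffies; with the usual Linux USER_HZ of 100, the idle rate of one CPU reads roughly as percent idle.

```python
def derive_rate(prev, curr):
    """Per-second rate between two (time, counter) samples of a collectd
    'derive' data source. Returns None if time did not advance, and None
    for a negative difference (assumed to be a counter reset)."""
    (t0, v0), (t1, v1) = prev, curr
    dt = t1 - t0
    if dt <= 0:
        return None
    rate = (v1 - v0) / dt
    return rate if rate >= 0 else None


# Hypothetical consecutive samples of cpu/idle for CPU 0, 10 s apart.
prev = (1455198002.296594, 29482997)
curr = (1455198012.296594, 29483620)
print(round(derive_rate(prev, curr), 1))  # 62.3
```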

This message is sent to afd_node_controller_cpu_filter, whose message_matcher accepts it:

filter-afd_node_controller_cpu.toml:message_matcher = "(Type == 'metric' || Type == 'heka.sandbox.metric') && (Fields[name] == 'cpu_idle' || Fields[name] == 'cpu_wait')"
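Heka evaluates `message_matcher` expressions itself; the predicate below only reproduces this particular expression in Python, over a hypothetical dict layout of the message (`Type` plus a `Fields` dict), to show which messages reach the AFD CPU filter.

```python
def afd_cpu_matcher(msg):
    """Python equivalent of:
    (Type == 'metric' || Type == 'heka.sandbox.metric')
      && (Fields[name] == 'cpu_idle' || Fields[name] == 'cpu_wait')
    `msg` is a dict with a 'Type' key and a 'Fields' dict (illustrative layout).
    """
    fields = msg.get("Fields", {})
    return (msg.get("Type") in ("metric", "heka.sandbox.metric")
            and fields.get("name") in ("cpu_idle", "cpu_wait"))


collectd_msg = {"Type": "metric", "Fields": {"name": "cpu_idle", "value": 62.2994}}
afd_msg = {"Type": "heka.sandbox.afd_node_metric", "Fields": {"name": "node_status"}}
print(afd_cpu_matcher(collectd_msg), afd_cpu_matcher(afd_msg))  # True False
```

The AFD output itself has a different `Type`, so it is not fed back into the same filter.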

The filter then generates an alarm:

:Timestamp: 2016-02-11 13:30:46 +0000 UTC

:Type: heka.sandbox.afd_node_metric

:Hostname: node-6

:Pid: 0

:Uuid: d28b4847-310f-400d-a2ef-66b59b69cfe4

:Logger: afd_node_controller_cpu_filter

:Payload: {"alarms":[{"periods":1,"tags":{},"severity":"CRITICAL","window":120,"operator":"<=","function":"avg","fields":{},"metric":"cpu_idle","message":"The CPU usage is too high (controller node).","threshold":150,"value":50.740816666667}]}

:EnvVersion:

:Severity: 7

:Fields:

| name:"environment_label" type:string value:"test2"

| name:"source" type:string value:"cpu"

| name:"node_role" type:string value:"controller"

| name:"openstack_release" type:string value:"2015.1.0-7.0"

| name:"tag_fields" type:string value:["node_role","source"]

| name:"openstack_region" type:string value:"RegionOne"

| name:"name" type:string value:"node_status"

| name:"hostname" type:string value:"node-6"

| name:"deployment_mode" type:string value:"ha_compact"

| name:"openstack_roles" type:string value:"primary-controller"

| name:"deployment_id" type:string value:"3"

| name:"value" type:double value:3
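When following a value through the pipeline, it can help to turn the `| name:... type:... value:...` field dumps into a dictionary. A minimal sketch, assuming only the forms that appear in this document (quoted strings, JSON-style lists, and doubles); it is not a parser for Heka's full output format.

```python
import json
import re

# Matches lines like: | name:"value" type:double value:3
FIELD_RE = re.compile(r'^\|\s*name:"([^"]+)"\s+type:(\w+)\s+value:(.*)$')


def parse_fields(text):
    """Parse Heka field dump lines into {name: python_value}."""
    fields = {}
    for line in text.splitlines():
        m = FIELD_RE.match(line.strip())
        if not m:
            continue  # skip headers such as :Type: or blank lines
        name, ftype, raw = m.groups()
        raw = raw.strip()
        if ftype == "double":
            fields[name] = float(raw)
        else:
            # Strings are printed JSON-style: "x" or ["a","b"].
            try:
                fields[name] = json.loads(raw)
            except ValueError:
                fields[name] = raw
    return fields


dump = '''
| name:"source" type:string value:"cpu"
| name:"tag_fields" type:string value:["node_role","source"]
| name:"name" type:string value:"node_status"
| name:"value" type:double value:3
'''
f = parse_fields(dump)
print(f["name"], f["value"], f["tag_fields"])  # node_status 3.0 ['node_role', 'source']
```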

This AFD message is sent to Nagios by the nagios_afd_nodes_output plugin:

[nagios_afd_nodes_output]
type = "HttpOutput"
message_matcher = "Fields[aggregator] == NIL && Type == 'heka.sandbox.afd_node_metric'"
encoder = "nagios_afd_nodes_encoder"
<SKIP>
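Nagios plugin return codes are fixed: 0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN (this is why `check_dummy 3` in the command definition later reports UNKNOWN). The AFD `node_status` value uses a different scale (3 for the CRITICAL alarm above), so the encoder has to translate it. The sketch below shows such a translation as a Nagios `PROCESS_SERVICE_CHECK_RESULT` external command. How `nagios_afd_nodes_encoder` actually formats its HTTP request is not shown in this document; the mapping of AFD values 1 and 2 and the service name `controller.cpu` are assumptions.

```python
import time

# Nagios plugin return codes (standard).
NAGIOS_OK, NAGIOS_WARNING, NAGIOS_CRITICAL, NAGIOS_UNKNOWN = 0, 1, 2, 3

# AFD node_status -> Nagios code. 0 and 3 match the messages in this
# document; 1 and 2 are assumptions (warning / unknown).
AFD_TO_NAGIOS = {
    0: NAGIOS_OK,
    1: NAGIOS_WARNING,
    2: NAGIOS_UNKNOWN,
    3: NAGIOS_CRITICAL,
}


def passive_check_command(host, service, afd_value, output, now=None):
    """Build a Nagios PROCESS_SERVICE_CHECK_RESULT external command line.
    Unknown AFD values are reported as UNKNOWN."""
    code = AFD_TO_NAGIOS.get(int(afd_value), NAGIOS_UNKNOWN)
    ts = int(now if now is not None else time.time())
    return f"[{ts}] PROCESS_SERVICE_CHECK_RESULT;{host};{service};{code};{output}"


# 1455197446 is 2016-02-11 13:30:46 UTC, the timestamp of the AFD message above.
print(passive_check_command("node-6", "controller.cpu", 3,
                            "The CPU usage is too high (controller node).",
                            now=1455197446))
```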

Result

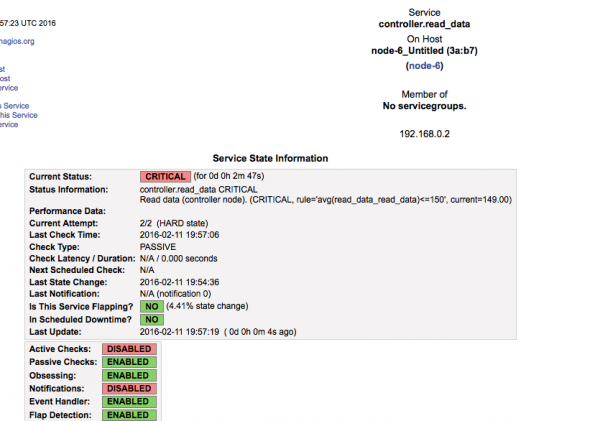

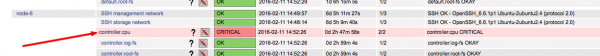

In nagios we can see alert:

As you can see threshold is 150 as we configured:

Create new alarm

Data Flow

Data in Collectd

First, collectd needs to collect the data. In this example we use a read plugin which simply reads a value from a file.

Example of the data provided by the plugin:

collectd.Values(type='read_data',type_instance='read_data',plugin='read_file_demo_plugin',plugin_instance='read_file_plugin_instance',host='node-6',time=1455205416.4896111,interval=10.0,values=[888999888.0],meta={'0': True})

read_file_demo_plugin (read_data): 888999888.000000
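The plugin source is not shown. The debug line is the representation of a `collectd.Values` object from collectd's Python plugin, so a plugin of that kind might look like the sketch below. Assumptions: the module is loaded through `<Plugin python>` with an `Import` line, a custom `read_data` type exists in a types.db (the Heka message shows the data source type `gauge`), and the module name `read_file_demo` is hypothetical. The plugin and instance names, the metadata, and the file path are taken from this document.

```python
"""Sketch of a collectd Python read plugin that reads one number from a file.

Assumed configuration (module name is hypothetical):
  <Plugin python>
    Import "read_file_demo"
  </Plugin>
The 'read_data' type must exist in a types.db, e.g.:  read_data  value:GAUGE:U:U
"""

DATA_FILE = "/var/log/collectd_in_data"


def read_value(path=DATA_FILE):
    """Return the first number in `path` as a float, or None if unreadable."""
    try:
        with open(path) as f:
            return float(f.read().split()[0])
    except (OSError, ValueError, IndexError):
        return None


def read_callback():
    value = read_value()
    if value is None:
        return  # nothing to dispatch this interval
    vl = collectd.Values(
        plugin="read_file_demo_plugin",
        plugin_instance="read_file_plugin_instance",
        type="read_data",
        type_instance="read_data",
    )
    vl.meta = {"0": True}  # same metadata as in the debug output above
    vl.dispatch(values=[value])


try:
    import collectd  # only available inside collectd's embedded interpreter
    collectd.register_read(read_callback)
except ImportError:
    collectd = None
```

Keeping the file parsing in `read_value` makes it testable outside collectd.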

Data in Heka

The data arrives from collectd:

:Timestamp: 2016-02-11 15:45:36.490000128 +0000 UTC

:Type: metric

:Hostname: node-6

:Pid: 22518

:Uuid: ab07cf60-55b6-41c9-a530-4e88dbe6ebc8

:Logger: collectd

:Payload: {"type":"read_data","values":[889000000],"type_instance":"read_data","meta":{"0":true},"dsnames":["value"],"plugin":"read_file_demo_plugin","time":1455205536.49,"interval":10,"host":"node-6","dstypes":["gauge"],"plugin_instance":"read_file_plugin_instance"}

:EnvVersion:

:Severity: 6

:Fields:

| name:"environment_label" type:string value:"test2"

| name:"source" type:string value:"read_file_demo_plugin"

| name:"deployment_mode" type:string value:"ha_compact"

| name:"openstack_release" type:string value:"2015.1.0-7.0"

| name:"openstack_roles" type:string value:"primary-controller"

| name:"openstack_region" type:string value:"RegionOne"

| name:"name" type:string value:"read_data_read_data"

| name:"hostname" type:string value:"node-6"

| name:"value" type:double value:8.89e+08

| name:"deployment_id" type:string value:"3"

| name:"type" type:string value:"gauge"

| name:"interval" type:double value:10
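Comparing the collectd samples with the Heka fields shows how metrics are renamed: plugin `cpu` with type_instance `idle` and plugin_instance `0` becomes `cpu_idle` with `cpu_number` = "0", and type `read_data` with type_instance `read_data` becomes `read_data_read_data`. The function below reproduces only these two observed cases; the fallback rule is a guess that fits `read_data`, not the LMA collectd decoder's logic, and the function name is hypothetical.

```python
import json


def heka_fields(payload):
    """Map one collectd JSON payload to the Heka fields seen in this document.
    Only the two observed cases are modelled; the fallback is a guess."""
    plugin = payload["plugin"]
    fields = {"source": plugin, "value": payload["values"][0]}
    if plugin == "cpu":
        # cpu_<type_instance>, with the CPU index kept as a separate field.
        fields["name"] = "cpu_" + payload["type_instance"]
        fields["cpu_number"] = payload["plugin_instance"]
    else:
        # Hypothetical fallback: <type>_<type_instance>.
        fields["name"] = payload["type"] + "_" + payload["type_instance"]
    return fields


# Payloads copied from the Heka messages in this document.
cpu_payload = '{"type":"cpu","values":[62.2994],"type_instance":"idle","dsnames":["value"],"plugin":"cpu","time":1455193032.297,"interval":10,"host":"node-6","dstypes":["derive"],"plugin_instance":"0"}'
read_payload = '{"type":"read_data","values":[889000000],"type_instance":"read_data","meta":{"0":true},"dsnames":["value"],"plugin":"read_file_demo_plugin","time":1455205536.49,"interval":10,"host":"node-6","dstypes":["gauge"],"plugin_instance":"read_file_plugin_instance"}'

print(heka_fields(json.loads(cpu_payload))["name"])   # cpu_idle
print(heka_fields(json.loads(read_payload))["name"])  # read_data_read_data
```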

Filter configuration

Configure the filter manually:

- add one more instance of afd.lua:

[afd_node_controller_read_data_filter]

type = "SandboxFilter"

filename = "/usr/share/lma_collector/filters/afd.lua"

preserve_data = false

message_matcher = "(Type == 'metric' || Type == 'heka.sandbox.metric') && (Fields[name] == 'read_data_read_data')"

ticker_interval = 10

[afd_node_controller_read_data_filter.config]

hostname = 'node-6'

afd_type = 'node'

afd_file = 'lma_alarms_read_data'

afd_cluster_name = 'controller'

afd_logical_name = 'read_data'
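Because the filter section follows a fixed pattern, it can be generated for other metrics. The helper below is hypothetical; the keys and values mirror the section above (for built-in alarms, Puppet generates this configuration).

```python
def afd_filter_toml(hostname, cluster, logical_name, metrics, afd_file):
    """Render an afd.lua filter section like the one above (hypothetical helper)."""
    section = f"afd_node_{cluster}_{logical_name}_filter"
    # One Fields[name] clause per metric, joined with ||.
    names = " || ".join(f"Fields[name] == '{m}'" for m in metrics)
    return "\n".join([
        f"[{section}]",
        'type = "SandboxFilter"',
        'filename = "/usr/share/lma_collector/filters/afd.lua"',
        "preserve_data = false",
        f"message_matcher = \"(Type == 'metric' || Type == 'heka.sandbox.metric') && ({names})\"",
        "ticker_interval = 10",
        "",
        f"[{section}.config]",
        f"hostname = '{hostname}'",
        "afd_type = 'node'",
        f"afd_file = '{afd_file}'",
        f"afd_cluster_name = '{cluster}'",
        f"afd_logical_name = '{logical_name}'",
    ])


print(afd_filter_toml("node-6", "controller", "read_data",
                      ["read_data_read_data"], "lma_alarms_read_data"))
```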

We also need to configure the alarm definition, because this is a new alarm (for existing alarms the definition is generated by Puppet).

File /usr/share/heka/lua_modules/lma_alarms_read_data.lua:

local M = {}
setfenv(1, M) -- Remove external access to contain everything in the module

-- Loaded by the afd.lua filter through afd_file = 'lma_alarms_read_data'.
local alarms = {
  {
    -- Name reused from the CPU alarm in this demo; a distinct name would be clearer.
    ['name'] = 'cpu-critical-controller',
    ['description'] = 'Read data (controller node).',
    ['severity'] = 'critical',
    ['trigger'] = {
      ['logical_operator'] = 'or',
      ['rules'] = {
        {
          -- Fire when the 120 s average of read_data_read_data is <= 150.
          ['metric'] = 'read_data_read_data',
          ['fields'] = {
          },
          ['relational_operator'] = '<=',
          ['threshold'] = '150',
          ['window'] = '120',
          ['periods'] = '0',
          ['function'] = 'avg',
        },
      },
    },
  },
}

return alarms

Nagios Configuration

We also need to add a command definition and a service definition to Nagios.

- Command definition (lma_services_commands.cfg)

define command {

command_line /usr/lib/nagios/plugins/check_dummy 3 'No data received for at least 130 seconds'

command_name return-unknown-node-6.controller.read_data

}

- Service definition (lma_services.cfg)

define service {

active_checks_enabled 0

check_command return-unknown-node-6.controller.read_data

check_freshness 1

check_interval 1

contact_groups openstack

freshness_threshold 65

host_name node-6

max_check_attempts 2

notifications_enabled 0

passive_checks_enabled 1

process_perf_data 0

retry_interval 1

service_description controller.read_data

use generic-service

}
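The two definitions follow a naming pattern (`return-unknown-<host>.<cluster>.<name>` and `<cluster>.<name>`). With `check_freshness 1`, when no passive result arrives within `freshness_threshold` seconds, Nagios runs `check_command` itself, and `check_dummy 3` then reports UNKNOWN. A hypothetical helper that renders both definitions with the values used above:

```python
def nagios_definitions(host, cluster, name, freshness_threshold=65, no_data_seconds=130):
    """Render the command and service definitions for one AFD passive check
    (hypothetical helper; defaults copied from the definitions above)."""
    service = f"{cluster}.{name}"
    command = f"return-unknown-{host}.{service}"
    command_def = "\n".join([
        "define command {",
        f"    command_line /usr/lib/nagios/plugins/check_dummy 3 "
        f"'No data received for at least {no_data_seconds} seconds'",
        f"    command_name {command}",
        "}",
    ])
    settings = [
        ("active_checks_enabled", 0),
        ("check_command", command),
        ("check_freshness", 1),
        ("check_interval", 1),
        ("contact_groups", "openstack"),
        ("freshness_threshold", freshness_threshold),
        ("host_name", host),
        ("max_check_attempts", 2),
        ("notifications_enabled", 0),
        ("passive_checks_enabled", 1),
        ("process_perf_data", 0),
        ("retry_interval", 1),
        ("service_description", service),
        ("use", "generic-service"),
    ]
    service_def = "\n".join(
        ["define service {"] + [f"    {k} {v}" for k, v in settings] + ["}"]
    )
    return command_def, service_def


cmd_def, svc_def = nagios_definitions("node-6", "controller", "read_data")
print(cmd_def)
print(svc_def)
```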

Results

Collectd reads the value from /var/log/collectd_in_data, so to get the "OK" state we need to write any number greater than 150, the threshold configured in the alarm.

echo 15188899 > /var/log/collectd_in_data

The data generated by the AFD filter is then:

:Timestamp: 2016-02-11 16:13:18 +0000 UTC

:Type: heka.sandbox.afd_node_metric

:Hostname: node-6

:Pid: 0

:Uuid: 7f17e0fe-d8c5-477d-a6c4-64e9234fbd93

:Logger: afd_node_controller_read_data_filter

:Payload: {"alarms":[]}

:EnvVersion:

:Severity: 7

:Fields:

| name:"environment_label" type:string value:"test2"

| name:"source" type:string value:"read_data"

| name:"node_role" type:string value:"controller"

| name:"openstack_release" type:string value:"2015.1.0-7.0"

| name:"tag_fields" type:string value:["node_role","source"]

| name:"openstack_region" type:string value:"RegionOne"

| name:"name" type:string value:"node_status"

| name:"hostname" type:string value:"node-6"

| name:"deployment_mode" type:string value:"ha_compact"

| name:"openstack_roles" type:string value:"primary-controller"

| name:"deployment_id" type:string value:"3"

| name:"value" type:double value:0

| name:"aggregator" type:string value:"present"

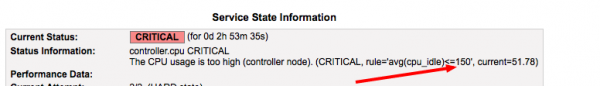

In Nagios we can see the "OK" status:

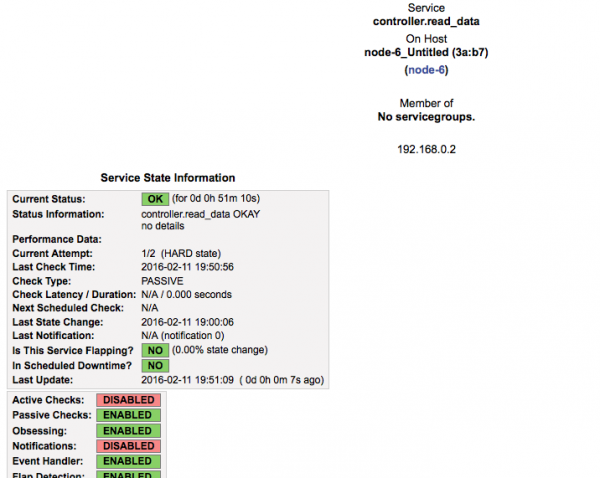

- Next, we can simulate the CRITICAL state:

echo 1 > /var/log/collectd_in_data

Data in Heka:

:Timestamp: 2016-02-11 16:44:53 +0000 UTC

:Type: heka.sandbox.afd_node_metric

:Hostname: node-6

:Pid: 0

:Logger: afd_node_controller_read_data_filter

:Payload: {"alarms":[{"periods":1,"tags":{},"severity":"CRITICAL","window":120,"operator":"<=","function":"avg","fields":{},"metric":"read_data_read_data","message":"Read data (controller node).","threshold":150,"value":1}]}

:EnvVersion:

:Severity: 7

:Fields:

| name:"environment_label" type:string value:"test2"

| name:"source" type:string value:"read_data"

| name:"node_role" type:string value:"controller"

| name:"openstack_release" type:string value:"2015.1.0-7.0"

| name:"tag_fields" type:string value:["node_role","source"]

| name:"openstack_region" type:string value:"RegionOne"

| name:"name" type:string value:"node_status"

| name:"hostname" type:string value:"node-6"

| name:"deployment_mode" type:string value:"ha_compact"

| name:"openstack_roles" type:string value:"primary-controller"

| name:"deployment_id" type:string value:"3"

| name:"value" type:double value:3

| name:"aggregator" type:string value:"present"
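Because the rule averages over a 120-second window, the state does not change the moment the file is rewritten. A rough estimate, assuming one sample every 10 seconds (the collectd interval above) and a plain mean over the last 12 samples; the real windowing in afd.lua may differ:

```python
from collections import deque

INTERVAL = 10   # collectd interval from the messages above (seconds)
WINDOW = 120    # alarm window (seconds)
THRESHOLD = 150


def seconds_until_critical(old_value, new_value):
    """Start from a full window of old_value samples, feed new_value every
    INTERVAL seconds, and return the seconds until avg <= THRESHOLD.
    Returns None if new_value alone can never reach the threshold."""
    size = WINDOW // INTERVAL
    window = deque([old_value] * size, maxlen=size)
    elapsed = 0
    for _ in range(size + 1):
        if sum(window) / size <= THRESHOLD:
            return elapsed
        window.append(new_value)  # oldest sample drops out automatically
        elapsed += INTERVAL
    return None


print(seconds_until_critical(15188899, 1))  # 120
```

So after `echo 1` the CRITICAL state can be expected roughly two minutes later, not immediately.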

GO DEEPER!

The following sections describe each part of LMA.

Collectd

Collectd collects the data; all details about collectd are in a separate document:

- Collectd in LMA detailed review

Heka

Heka is a complex tool, so the data flow in Heka is described in separate documents, divided into parts:

- Heka in general

- Heka inputs details

- Heka Splitters

- Heka Decoders

- Heka debugging review

- How to create your own Heka filter, Output and Nagios integration

Kibana and Grafana

TBD

Nagios

Passive checks overview: ToBeDone!