Openstack over k8s: differences between revisions

Sirmax (talk | contribs) (→6666) |

Sirmax (talk | contribs) |

||

| (31 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| + | [[Категория:Openstack]] |

||

| + | [[Категория:Linux]] |

||

| + | =Comprehensive K8s/Helm/OpenStack skills assignment, 8 working hours (1 day)= |

||

<PRE> |

<PRE> |

||

| − | + | * Install openstack(ceph as storage) on top of K8s(All-in-one-installation) using openstack-helm project |

|

| − | + | * change Keystone token expiration time afterwards to 24 hours |

|

| − | + | * deploy 3 VMs connected to each other using heat |

|

</PRE> |

</PRE> |

||

| + | ==TL; DR== |

||

| − | =install minikube= |

||

| + | * It took me roughly 13 working hours to finish the assignment |

||

| − | =Install helm= |

||

| + | The good: |

||

| + | * The assignment can be done in 8 hours (or even faster) |

||

| + | The bad: |

||

| − | brew install kubernetes-helm |

||

| + | * It is practically impossible to do on a laptop without Linux. |

||

| + | * Roughly half of the time went into trying to get it running directly on Mac OS, using an already existing minikube as the K8s cluster |

||

| + | That was a clear failure: at the very least the ceph charts are not compatible with minikube at all (https://github.com/ceph/ceph-helm/issues/73), and I never got as far as the others. <BR> |

||

| + | Deploying without the script wrappers would clearly have taken more than 1 day (in fact, without cutting corners by using the scripts, at least a week) |

||

| + | * Once I realized that I would not manage to deploy on minikube in the allotted time, I decided to set up a VM with Ubuntu and continue working on it |

||

| + | The second clear failure (though not mine =) ) is that the assignment requires at least 8 GB of free memory, |

||

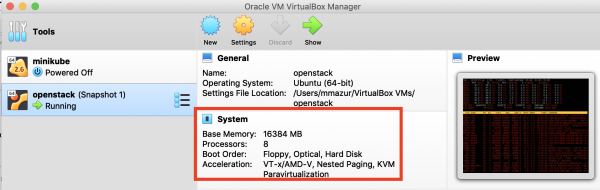

| + | and in reality even on a VM with 16 GB of RAM and 8 cores everything ran very slowly. (Someone with an 8 GB laptop will not be able to do this assignment for lack of memory) |

||

| + | <BR> As a consequence, scripts regularly failed because of timeouts. |

||

| + | <BR>I should also note that with a not very fast Internet connection image pulls were slow: the scripts did not wait long enough and failed on timeout. <BR> |

||

| + | Downloading the images in advance would have been a good idea, but I only thought of it halfway through and decided not to spend time working out which images were needed. |

||

| + | |||

| + | ==Solution== |

||

| + | |||

| + | The deployment logs are moved to a separate section at the end so as not to clutter the document. |

||

| + | ===Creating the Ubuntu VM=== |

||

| + | https://docs.openstack.org/openstack-helm/latest/install/developer/requirements-and-host-config.html |

||

<PRE> |

<PRE> |

||

| + | System Requirements¶ |

||

| − | helm init |

||

| + | The recommended minimum system requirements for a full deployment are: |

||

| − | $HELM_HOME has been configured at /Users/mmazur/.helm. |

||

| + | 16GB of RAM |

||

| − | Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster. |

||

| + | 8 Cores |

||

| + | 48GB HDD |

||

| + | </PRE> |

||

| + | [[Изображение:VM-1.png|600px]] |

||

| − | Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy. |

||

| + | |||

| − | To prevent this, run `helm init` with the --tiller-tls-verify flag. |

||

| + | |||

| − | For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation |

||

| + | At this stage I initially made 2 mistakes |

||

| − | Happy Helming! |

||

| + | * Created a machine that was too small (too few CPU cores) |

||

| + | * Did not check for overlapping networks |

||

| + | <PRE> |

||

| + | Warning |

||

| + | By default the Calico CNI will use 192.168.0.0/16 and Kubernetes services will use 10.96.0.0/16 as the CIDR for services. |

||

| + | Check that these CIDRs are not in use on the development node before proceeding, or adjust as required. |

||

| + | </PRE> |

||

| + | By the way, the mask in the documentation looks wrong: the mask actually in use is <B>/12</B><BR> |

||

| + | Output of ps, slightly edited for readability |

||

| + | <PRE> |

||

| + | root 5717 4.0 1.7 448168 292172 ? Ssl 19:27 0:51 | \_ kube-apiserver --feature-gates=MountPropagation=true,PodShareProcessNamespace=true |

||

| + | --service-node-port-range=1024-65535 |

||

| + | --advertise-address=172.17.0.1 |

||

| + | --service-cluster-ip-range=10.96.0.0/12 |

||

| + | </PRE> |
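The overlap check that the warning asks for can be sketched in Python with the standard `ipaddress` module. This is only an illustration, not part of the original procedure. The two cluster CIDRs come from the documentation and the `ps` output above; the `host_networks` list is a hypothetical example (the `/24` mask on the VM network and the `10.100.0.0/24` network are assumptions).

```python
import ipaddress

# CIDRs used by the cluster: Calico pods (from the docs) and services
# (from the kube-apiserver flags above: /12, not the documented /16).
CLUSTER_CIDRS = {
    "calico-pods": ipaddress.ip_network("192.168.0.0/16"),
    "k8s-services": ipaddress.ip_network("10.96.0.0/12"),
}


def find_overlaps(host_networks):
    """Return (host_network, cluster_name) pairs that overlap."""
    overlaps = []
    for raw in host_networks:
        # strict=False lets a host address such as 192.168.1.10/24 be used.
        net = ipaddress.ip_network(raw, strict=False)
        for name, cidr in CLUSTER_CIDRS.items():
            if net.overlaps(cidr):
                overlaps.append((str(net), name))
    return overlaps


# Hypothetical networks on the development node; replace with the real ones.
host_networks = ["10.255.57.0/24", "172.17.0.0/16", "10.100.0.0/24"]
print(find_overlaps(host_networks))
```

With the `/12` mask the service range covers 10.96.0.0 through 10.111.255.255, so a network such as `10.100.0.0/24` collides with it even though it would look safe against the documented `/16`.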

||

| + | |||

| + | ===Preparation=== |

||

| + | * https://docs.openstack.org/openstack-helm/latest/install/developer/kubernetes-and-common-setup.html |

||

| + | Following the instructions without trying to change anything, I ran into no problems on Ubuntu 18. <BR> |

||

| + | |||

| + | ===Installing OpenStack=== |

||

| + | If you follow the instructions, no problems come up apart from the timeouts. |

||

| + | <BR> |

||

| + | As far as I could tell, all the scripts clean up correctly after themselves, so rerunning them is reasonably safe. |

||

| + | <BR> |

||

| + | Unfortunately I did not keep a list of the scripts that had to be rerun |
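Rerunning a script after a timeout can be automated with a small retry wrapper. The sketch below is an assumption about how one might do it, not what was actually used here. It relies on the observation above that the scripts clean up after themselves. The commands passed in are stand-ins; a real call would pass the path of a deployment script.

```python
import subprocess
import sys
import time


def run_with_retries(cmd, attempts=3, delay=0.0):
    """Run cmd until it exits with status 0 or attempts are exhausted.

    Returns the 1-based number of the successful attempt, or None.
    """
    for attempt in range(1, attempts + 1):
        result = subprocess.run(cmd)
        if result.returncode == 0:
            return attempt
        if attempt < attempts:
            # Give slow image pulls some time before trying again.
            time.sleep(delay)
    return None


# Stand-in commands that simply succeed or fail.
ok = [sys.executable, "-c", "raise SystemExit(0)"]
bad = [sys.executable, "-c", "raise SystemExit(1)"]
print(run_with_retries(ok))
print(run_with_retries(bad, attempts=2))
```

Logging each failed command inside the loop would also produce the list of rerun scripts that was not kept here.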

||

| + | |||

| + | ===Verifying OpenStack=== |

||

| + | Once it finished, I checked in the simplest way possible, whether keystone works: |

||

| + | |||

| + | <PRE> |

||

| + | root@openstack:~# export OS_CLOUD=openstack_helm |

||

| + | root@openstack:~# openstack token issue |

||

</PRE> |

</PRE> |

||

| + | At first glance, all the pods have at least started |

||

<PRE> |

<PRE> |

||

| − | kubectl |

+ | kubectl -n openstack get pods |

| − | NAME READY STATUS RESTARTS AGE |

+ | NAME READY STATUS RESTARTS AGE |

| − | + | ceph-ks-endpoints-hkj77 0/3 Completed 0 3h |

|

| − | + | ceph-ks-service-l4wdx 0/1 Completed 0 3h |

|

| − | + | ceph-openstack-config-ceph-ns-key-generator-z82mk 0/1 Completed 0 17h |

|

| − | + | ceph-rgw-66685f585d-st7dp 1/1 Running 0 3h |

|

| − | + | ceph-rgw-storage-init-2vrpg 0/1 Completed 0 3h |

|

| − | + | cinder-api-85df68f5d8-j6mqh 1/1 Running 0 2h |

|

| − | + | cinder-backup-5f9598868-5kxxx 1/1 Running 0 2h |

|

| − | + | cinder-backup-storage-init-g627m 0/1 Completed 0 2h |

|

| − | + | cinder-bootstrap-r2295 0/1 Completed 0 2h |

|

| − | + | cinder-db-init-nk7jm 0/1 Completed 0 2h |

|

| + | cinder-db-sync-vlbcm 0/1 Completed 0 2h |

||

| + | cinder-ks-endpoints-cnwgb 0/9 Completed 0 2h |

||

| + | cinder-ks-service-6zs57 0/3 Completed 0 2h |

||

| + | cinder-ks-user-bp8zb 0/1 Completed 0 2h |

||

| + | cinder-rabbit-init-j97b7 0/1 Completed 0 2h |

||

| + | cinder-scheduler-6bfcd6476d-r87hm 1/1 Running 0 2h |

||

| + | cinder-storage-init-6ksjc 0/1 Completed 0 2h |

||

| + | cinder-volume-5fccd4cc5-dpxqm 1/1 Running 0 2h |

||

| + | cinder-volume-usage-audit-1549203300-25mkf 0/1 Completed 0 14m |

||

| + | cinder-volume-usage-audit-1549203600-hnh54 0/1 Completed 0 8m |

||

| + | cinder-volume-usage-audit-1549203900-v5t4w 0/1 Completed 0 4m |

||

| + | glance-api-745dc74457-42nwf 1/1 Running 0 3h |

||

| + | glance-bootstrap-j5wt4 0/1 Completed 0 3h |

||

| + | glance-db-init-lw97h 0/1 Completed 0 3h |

||

| + | glance-db-sync-dbp5s 0/1 Completed 0 3h |

||

| + | glance-ks-endpoints-gm5rw 0/3 Completed 0 3h |

||

| + | glance-ks-service-64jfj 0/1 Completed 0 3h |

||

| + | glance-ks-user-ftv9c 0/1 Completed 0 3h |

||

| + | glance-rabbit-init-m7b7k 0/1 Completed 0 3h |

||

| + | glance-registry-6cb86c767-2mkbx 1/1 Running 0 3h |

||

| + | glance-storage-init-m29p4 0/1 Completed 0 3h |

||

| + | heat-api-69db75bb6d-h24w9 1/1 Running 0 3h |

||

| + | heat-bootstrap-v9642 0/1 Completed 0 3h |

||

| + | heat-cfn-86896f7466-n5dnz 1/1 Running 0 3h |

||

| + | heat-db-init-lfrsb 0/1 Completed 0 3h |

||

| + | heat-db-sync-wct2x 0/1 Completed 0 3h |

||

| + | heat-domain-ks-user-4fg65 0/1 Completed 0 3h |

||

| + | heat-engine-6756c84fdd-44hzf 1/1 Running 0 3h |

||

| + | heat-engine-cleaner-1549203300-s48sb 0/1 Completed 0 14m |

||

| + | heat-engine-cleaner-1549203600-gffn4 0/1 Completed 0 8m |

||

| + | heat-engine-cleaner-1549203900-6hwvj 0/1 Completed 0 4m |

||

| + | heat-ks-endpoints-wxjwp 0/6 Completed 0 3h |

||

| + | heat-ks-service-v95sk 0/2 Completed 0 3h |

||

| + | heat-ks-user-z6xhb 0/1 Completed 0 3h |

||

| + | heat-rabbit-init-77nzb 0/1 Completed 0 3h |

||

| + | heat-trustee-ks-user-mwrf5 0/1 Completed 0 3h |

||

| + | heat-trusts-7x7nt 0/1 Completed 0 3h |

||

| + | horizon-5877548d5d-27t8c 1/1 Running 0 3h |

||

| + | horizon-db-init-jsjm5 0/1 Completed 0 3h |

||

| + | horizon-db-sync-wxwpw 0/1 Completed 0 3h |

||

| + | ingress-86cf786fd8-fbz8w 1/1 Running 4 18h |

||

| + | ingress-error-pages-7f574d9cd7-b5kwh 1/1 Running 0 18h |

||

| + | keystone-api-f658f747c-q6w65 1/1 Running 0 3h |

||

| + | keystone-bootstrap-ds8t5 0/1 Completed 0 3h |

||

| + | keystone-credential-setup-hrp8t 0/1 Completed 0 3h |

||

| + | keystone-db-init-dhgf2 0/1 Completed 0 3h |

||

| + | keystone-db-sync-z8d5d 0/1 Completed 0 3h |

||

| + | keystone-domain-manage-86b25 0/1 Completed 0 3h |

||

| + | keystone-fernet-rotate-1549195200-xh9lv 0/1 Completed 0 2h |

||

| + | keystone-fernet-setup-txgc8 0/1 Completed 0 3h |

||

| + | keystone-rabbit-init-jgkqz 0/1 Completed 0 3h |

||

| + | libvirt-427lp 1/1 Running 0 2h |

||

| + | mariadb-ingress-5cff98cbfc-24vjg 1/1 Running 0 17h |

||

| + | mariadb-ingress-5cff98cbfc-nqlhq 1/1 Running 0 17h |

||

| + | mariadb-ingress-error-pages-5c89b57bc-twn7z 1/1 Running 0 17h |

||

| + | mariadb-server-0 1/1 Running 0 17h |

||

| + | memcached-memcached-6d48bd48bc-7kd84 1/1 Running 0 3h |

||

| + | neutron-db-init-rvf47 0/1 Completed 0 2h |

||

| + | neutron-db-sync-6w7bn 0/1 Completed 0 2h |

||

| + | neutron-dhcp-agent-default-znxhn 1/1 Running 0 2h |

||

| + | neutron-ks-endpoints-47xs8 0/3 Completed 1 2h |

||

| + | neutron-ks-service-sqtwg 0/1 Completed 0 2h |

||

| + | neutron-ks-user-tpmrb 0/1 Completed 0 2h |

||

| + | neutron-l3-agent-default-5nbsp 1/1 Running 0 2h |

||

| + | neutron-metadata-agent-default-9ml6v 1/1 Running 0 2h |

||

| + | neutron-ovs-agent-default-mg8ln 1/1 Running 0 2h |

||

| + | neutron-rabbit-init-sgnwm 0/1 Completed 0 2h |

||

| + | neutron-server-9bdc765c9-bx6sf 1/1 Running 0 2h |

||

| + | nova-api-metadata-78fb54c549-zcmxg 1/1 Running 2 2h |

||

| + | nova-api-osapi-6c5c6dd4fc-7z5qq 1/1 Running 0 2h |

||

| + | nova-bootstrap-hp6n4 0/1 Completed 0 2h |

||

| + | nova-cell-setup-1549195200-v5bv8 0/1 Completed 0 2h |

||

| + | nova-cell-setup-1549198800-6d8sm 0/1 Completed 0 1h |

||

| + | nova-cell-setup-1549202400-c9vfz 0/1 Completed 0 29m |

||

| + | nova-cell-setup-dfdzw 0/1 Completed 0 2h |

||

| + | nova-compute-default-fmqtl 1/1 Running 0 2h |

||

| + | nova-conductor-5b9956bffc-5ts7s 1/1 Running 0 2h |

||

| + | nova-consoleauth-7f8dbb8865-lt5mr 1/1 Running 0 2h |

||

| + | nova-db-init-hjp2p 0/3 Completed 0 2h |

||

| + | nova-db-sync-zn6px 0/1 Completed 0 2h |

||

| + | nova-ks-endpoints-ldzhz 0/3 Completed 0 2h |

||

| + | nova-ks-service-c64tb 0/1 Completed 0 2h |

||

| + | nova-ks-user-kjskm 0/1 Completed 0 2h |

||

| + | nova-novncproxy-6f485d9f4c-6m2n5 1/1 Running 0 2h |

||

| + | nova-placement-api-587c888875-6cmmb 1/1 Running 0 2h |

||

| + | nova-rabbit-init-t275g 0/1 Completed 0 2h |

||

| + | nova-scheduler-69886c6fdf-hcwm6 1/1 Running 0 2h |

||

| + | nova-service-cleaner-1549195200-7jw2d 0/1 Completed 1 2h |

||

| + | nova-service-cleaner-1549198800-pvckn 0/1 Completed 0 1h |

||

| + | nova-service-cleaner-1549202400-kqpxz 0/1 Completed 0 29m |

||

| + | openvswitch-db-nx579 1/1 Running 0 2h |

||

| + | openvswitch-vswitchd-p4xj5 1/1 Running 0 2h |

||

| + | placement-ks-endpoints-vt4pk 0/3 Completed 0 2h |

||

| + | placement-ks-service-sw2b9 0/1 Completed 0 2h |

||

| + | placement-ks-user-zv755 0/1 Completed 0 2h |

||

| + | rabbitmq-rabbitmq-0 1/1 Running 0 4h |

||

| + | swift-ks-user-ktptt 0/1 Completed 0 3h |

||

</PRE> |

</PRE> |
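Scanning a list this long by eye is error-prone, so the "at least started" check can be sketched as a small parser over the `kubectl -n openstack get pods` output. This is a minimal sketch that assumes the default whitespace-separated column layout shown above. The pod `example-pod-00000` in the sample is hypothetical and only there to show a failing entry.

```python
def unhealthy_pods(kubectl_output):
    """Return names of pods whose STATUS is neither Running nor Completed."""
    bad = []
    for line in kubectl_output.strip().splitlines():
        fields = line.split()
        # Skip blank lines and the header row.
        if len(fields) < 3 or fields[0] == "NAME":
            continue
        name, status = fields[0], fields[2]
        if status not in ("Running", "Completed"):
            bad.append(name)
    return bad


sample = """
NAME READY STATUS RESTARTS AGE
keystone-api-f658f747c-q6w65 1/1 Running 0 3h
keystone-db-sync-z8d5d 0/1 Completed 0 3h
example-pod-00000 0/1 CrashLoopBackOff 5 1h
"""
print(unhealthy_pods(sample))
```

The check looks only at the STATUS column; a pod that is Running but not fully READY would still need a separate look.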

||

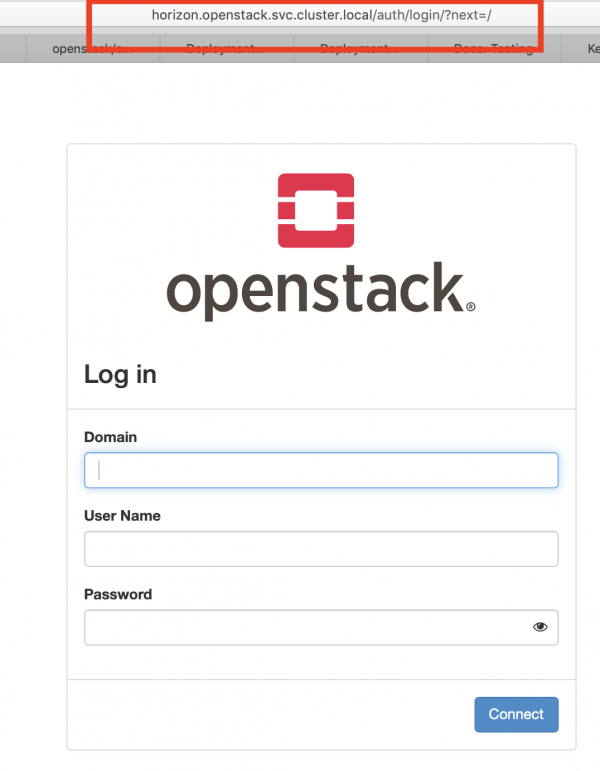

| + | ===Access to Horizon=== |

||

| + | (I looked up the settings in the ingress; 10.255.57.3 is the address of the virtual machine) |

||

<PRE> |

<PRE> |

||

| + | cat /etc/hosts |

||

| − | helm list |

||

| + | 10.255.57.3 os horizon.openstack.svc.cluster.local |

||

</PRE> |

</PRE> |

||

| + | [[Изображение:Horizon first login.png|600px]] |

||

| + | ===Keystone configuration=== |

||

| + | The assignment says: |

||

<PRE> |

<PRE> |

||

| + | change Keystone token expiration time afterwards to 24 hours |

||

| − | helm repo update |

||

| − | Hang tight while we grab the latest from your chart repositories... |

||

| − | ...Skip local chart repository |

||

| − | ...Successfully got an update from the "stable" chart repository |

||

| − | Update Complete. ⎈ Happy Helming!⎈ |

||

</PRE> |

</PRE> |

||

| + | First, let's check what is actually there |

||

| + | <PRE> |

||

| + | openstack token issue |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| + | | Field | Value | |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| + | | expires | 2019-02-04T00:25:34+0000 | |

||

| + | | id | gAAAAABcVt2-s8ugiwKaNQiA9djycTJ2CoDZ0sC176e54cjnE0RevPsXkgiZH0U5m_kNQlo0ctunA_TvD1tULyn0ckRkrO0Pxht1yT-cQ1TTidhkJR2sVojcXG3hiau0RMm0YOfoydDemyuvGMS7mwZ_Z2m9VtmJ-F83xQ8CwEfhItH6vRMzmGk | |

||

| + | | project_id | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | |

||

| + | | user_id | 42068c166a3245208b5ac78965eab80b | |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| + | </PRE> |

||

| + | It looks like TTL=12h <BR> |

||

| + | A quick read of the documentation ( https://docs.openstack.org/juno/config-reference/content/keystone-configuration-file.html ) led me to conclude that this is the section to change |

||

| + | <PRE> |

||

| + | [token] |

||

| + | expiration = 3600 |

||

| + | </PRE> |

||

| + | <BR> |

||

| + | Here I decided to do it "quick and dirty"; in the real world this would most likely not fly. |

||

| + | 1. Calculate the new value (I typed 34 instead of 24 by mistake) |

||

| − | =Ceph= |

||

| + | <PRE> |

||

| − | * http://docs.ceph.com/docs/mimic/start/kube-helm/ |

||

| + | bc |

||

| + | bc 1.07.1 |

||

| + | Copyright 1991-1994, 1997, 1998, 2000, 2004, 2006, 2008, 2012-2017 Free Software Foundation, Inc. |

||

| + | This is free software with ABSOLUTELY NO WARRANTY. |

||

| + | For details type `warranty'. |

||

| + | 34*60*60 |

||

| + | 122400 |

||

| + | quit |

||

| + | </PRE> |

||

| + | 2. Check that we have a single keystone instance |

||

| + | <BR><I> It would be funny if there were several of them, all issuing tokens with different TTLs </I> |

||

<PRE> |

<PRE> |

||

| + | docker ps | grep keystone |

||

| − | helm serve & |

||

| + | 41e785977105 16ec948e619f "/tmp/keystone-api.s…" 2 hours ago Up 2 hours k8s_keystone-api_keystone-api-f658f747c-q6w65_openstack_8ca3a9ed-279f-11e9-a72e-080027da2b2f_0 |

||

| − | [1] 35959 |

||

| + | 6905400831ad k8s.gcr.io/pause-amd64:3.1 "/pause" 2 hours ago Up 2 hours k8s_POD_keystone-api-f658f747c-q6w65_openstack_8ca3a9ed-279f-11e9-a72e-080027da2b2f_0 |

||

| − | 14:16:09-mmazur@Mac18:~/WORK_OTHER/Mirantis_test_task/ceph-helm$ Regenerating index. This may take a moment. |

||

| + | </PRE> |

||

| − | Now serving you on 127.0.0.1:8879 |

||

| + | The right tool here is of course <B>kubectl exec ...</B>, but I decided to cut a corner |

||

| + | <PRE> |

||

| + | docker exec -u root -ti 41e785977105 bash |

||

| + | </PRE> |

||

| + | Checking what is running |

||

| + | <PRE> |

||

| + | ps -auxfw |

||

| + | USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND |

||

| + | root 566 0.0 0.0 18236 3300 pts/0 Ss 12:34 0:00 bash |

||

| + | root 581 0.0 0.0 34428 2872 pts/0 R+ 12:36 0:00 \_ ps -auxfw |

||

| + | keystone 1 0.0 1.1 263112 185104 ? Ss 10:42 0:01 apache2 -DFOREGROUND |

||

| + | keystone 11 3.5 0.5 618912 95016 ? Sl 10:42 4:03 (wsgi:k -DFOREGROUND |

||

| + | keystone 478 0.1 0.0 555276 9952 ? Sl 12:23 0:00 apache2 -DFOREGROUND |

||

| + | keystone 506 0.2 0.0 555348 9956 ? Sl 12:24 0:01 apache2 -DFOREGROUND |

||

| + | </PRE> |

||

| + | As expected. |

||

| + | <BR> |

||

| + | The contents of /etc/keystone/keystone.conf are as expected too: 12h, as assumed |

||

| + | <PRE> |

||

| + | [token] |

||

| + | expiration = 43200 |

||

| + | </PRE> |
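The value needed for this section and the edit itself can be sketched with Python's standard `configparser`. This is a rough illustration only: keystone reads this file through oslo.config, and `configparser` drops comments and may not handle every construct a real `keystone.conf` contains. The helper name `set_token_expiration` is hypothetical.

```python
import configparser
import io

HOURS = 24
NEW_TTL = HOURS * 60 * 60  # 24 hours expressed in seconds


def set_token_expiration(conf_text, seconds):
    """Return conf_text with [token] expiration set to `seconds`."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("token"):
        parser.add_section("token")
    parser.set("token", "expiration", str(seconds))
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


# The section as found in the running container.
current = "[token]\nexpiration = 43200\n"
print(set_token_expiration(current, NEW_TTL))
```

Deriving the value from `HOURS` rather than typing the product by hand avoids the kind of slip where 34 is entered instead of 24.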

||

| + | I could not change the file in place, so, to break the rules completely, I edited it from outside, on the host |

||

| + | <PRE> |

||

| + | root@openstack:~# find /var -name keystone.conf |

||

| + | /var/lib/kubelet/pods/8ca3a9ed-279f-11e9-a72e-080027da2b2f/volumes/kubernetes.io~empty-dir/etckeystone/keystone.conf |

||

| + | /var/lib/kubelet/pods/8ca3a9ed-279f-11e9-a72e-080027da2b2f/volumes/kubernetes.io~secret/keystone-etc/..2019_02_03_12_37_10.041243569/keystone.conf |

||

| + | /var/lib/kubelet/pods/8ca3a9ed-279f-11e9-a72e-080027da2b2f/volumes/kubernetes.io~secret/keystone-etc/keystone.conf |

||

| + | </PRE> |

||

| + | and, thanking the developers for running keystone under Apache (which made it possible to do a reload instead of recreating the container, which I was not sure I knew how to do properly) |

||

| + | <PRE> |

||

| + | docker exec -u root -ti 41e785977105 bash |

||

| + | </PRE> |

||

| + | <PRE> |

||

| + | ps -auxfw |

||

| + | USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND |

||

| + | root 566 0.0 0.0 18236 3300 pts/0 Ss 12:34 0:00 bash |

||

| + | root 581 0.0 0.0 34428 2872 pts/0 R+ 12:36 0:00 \_ ps -auxfw |

||

| + | keystone 1 0.0 1.1 263112 185104 ? Ss 10:42 0:01 apache2 -DFOREGROUND |

||

| + | keystone 11 3.5 0.5 618912 95016 ? Sl 10:42 4:03 (wsgi:k -DFOREGROUND |

||

| + | keystone 478 0.1 0.0 555276 9952 ? Sl 12:23 0:00 apache2 -DFOREGROUND |

||

| + | keystone 506 0.2 0.0 555348 9956 ? Sl 12:24 0:01 apache2 -DFOREGROUND |

||

| + | root@keystone-api-f658f747c-q6w65:/etc/keystone# kill -HUP 1 |

||

</PRE> |

</PRE> |

||

<PRE> |

<PRE> |

||

| + | root@keystone-api-f658f747c-q6w65:/etc/keystone# ps -auxfw |

||

| − | helm repo add local http://localhost:8879/charts |

||

| + | USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND |

||

| − | "local" has been added to your repositories |

||

| + | root 566 0.0 0.0 18236 3300 pts/0 Ss 12:34 0:00 bash |

||

| + | root 583 0.0 0.0 34428 2888 pts/0 R+ 12:36 0:00 \_ ps -auxfw |

||

| + | keystone 1 0.0 1.1 210588 183004 ? Ss 10:42 0:01 apache2 -DFOREGROUND |

||

| + | keystone 11 3.5 0.0 0 0 ? Z 10:42 4:03 [apache2] <defunct> |

||

| + | root@keystone-api-f658f747c-q6w65:/etc/keystone# ps -auxfw |

||

| + | USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND |

||

| + | root 566 0.0 0.0 18236 3300 pts/0 Ss 12:34 0:00 bash |

||

| + | root 955 0.0 0.0 34428 2904 pts/0 R+ 12:36 0:00 \_ ps -auxfw |

||

| + | keystone 1 0.0 1.1 263120 185124 ? Ss 10:42 0:01 apache2 -DFOREGROUND |

||

| + | keystone 584 12.0 0.0 290680 8820 ? Sl 12:36 0:00 (wsgi:k -DFOREGROUND |

||

| + | keystone 585 14.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 586 14.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 587 17.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 588 13.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 589 14.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 590 10.0 0.0 555188 10020 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 591 12.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 592 10.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 593 15.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 594 14.0 0.0 265528 8572 ? R 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 595 13.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 596 11.0 0.0 266040 8832 ? R 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 597 19.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 598 14.0 0.0 555188 9956 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 599 18.0 0.0 555188 9952 ? Sl 12:36 0:00 apache2 -DFOREGROUND |

||

| + | keystone 600 11.0 0.0 265528 8376 ? R 12:36 0:00 apache2 -DFOREGROUND |

||

</PRE> |

</PRE> |

||

| + | Checking whether the changes were applied: |

||

<PRE> |

<PRE> |

||

| + | openstack token issue |

||

| − | git clone https://github.com/ceph/ceph-helm |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| − | cd ceph-helm/ceph |

||

| + | | Field | Value | |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| + | | expires | 2019-02-04T22:37:10+0000 | |

||

| + | | id | gAAAAABcVuB2tQtAX56G1_kqJKeekpsWDJPTE19IMhWvNlGQqmDZQap9pgXQQkhQNMQNpR7Q6XR_w5_ngsx_l36vKXUND75uy4fimAbaLBDBdxxOzJqDRq4NLz4sEdTzLs2T3nyISwItLloOj-8sw7x1Pg2-9N-9afudv_jcYLVCq2luAImfRpY | |

||

| + | | project_id | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | |

||

| + | | user_id | 42068c166a3245208b5ac78965eab80b | |

||

| + | +------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ |

||

| + | root@openstack:/var/lib/kubelet/pods/8ca3a9ed-279f-11e9-a72e-080027da2b2f/volumes/kubernetes.io~secret/keystone-etc/..data# date |

||

| + | Sun Feb 3 12:37:18 UTC 2019 |

||

</PRE> |

</PRE> |

||

| + | 34 hours (because of the typo), but I did not bother changing it to 24 |
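The 34-hour figure can be confirmed from the two timestamps in the output above. The sketch below is an illustration of that arithmetic; the helper name `remaining_ttl_seconds` is hypothetical, and the values are copied from the `openstack token issue` and `date` output.

```python
from datetime import datetime, timezone


def remaining_ttl_seconds(expires, now):
    """Seconds between `now` and the token's `expires` timestamp."""
    expires_dt = datetime.strptime(expires, "%Y-%m-%dT%H:%M:%S%z")
    return int((expires_dt - now).total_seconds())


# Values copied from the command output above.
expires = "2019-02-04T22:37:10+0000"
now = datetime(2019, 2, 3, 12, 37, 18, tzinfo=timezone.utc)

ttl = remaining_ttl_seconds(expires, now)
print(ttl, round(ttl / 3600, 2))
```

The result is 8 seconds short of 122400 because `date` was run a few seconds after the token was issued.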

||

| + | ===Heat Deploy=== |

||

| + | <B> deploy 3 VMs connected to each other using heat</B> |

||

| + | |||

| + | The easiest part: everything is described in the documentation https://docs.openstack.org/openstack-helm/latest/install/developer/exercise-the-cloud.html |

||

| + | <BR> For me, the VM was not created on the first try |

||

<PRE> |

<PRE> |

||

| + | [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] Instance failed to spawn |

||

| − | make |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] Traceback (most recent call last): |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/compute/manager.py", line 2133, in _build_resources |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] yield resources |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/compute/manager.py", line 1939, in _build_and_run_instance |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] block_device_info=block_device_info) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 2786, in spawn |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] block_device_info=block_device_info) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3193, in _create_image |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] fallback_from_host) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 3309, in _create_and_inject_local_root |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] instance, size, fallback_from_host) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/driver.py", line 6953, in _try_fetch_image_cache |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] size=size) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/imagebackend.py", line 242, in cache |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] *args, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/imagebackend.py", line 584, in create_image |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] prepare_template(target=base, *args, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/oslo_concurrency/lockutils.py", line 271, in inner |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] return f(*args, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/imagebackend.py", line 238, in fetch_func_sync |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] fetch_func(target=target, *args, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/libvirt/utils.py", line 458, in fetch_image |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] images.fetch_to_raw(context, image_id, target) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/images.py", line 132, in fetch_to_raw |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] fetch(context, image_href, path_tmp) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/virt/images.py", line 123, in fetch |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] IMAGE_API.download(context, image_href, dest_path=path) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/image/api.py", line 184, in download |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] dst_path=dest_path) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/image/glance.py", line 533, in download |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] _reraise_translated_image_exception(image_id) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/image/glance.py", line 1050, in _reraise_translated_image_exception |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] six.reraise(type(new_exc), new_exc, exc_trace) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/image/glance.py", line 531, in download |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] image_chunks = self._client.call(context, 2, 'data', image_id) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/nova/image/glance.py", line 168, in call |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] result = getattr(controller, method)(*args, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/glanceclient/common/utils.py", line 535, in inner |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] return RequestIdProxy(wrapped(*args, **kwargs)) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/glanceclient/v2/images.py", line 208, in data |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] resp, body = self.http_client.get(url) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/glanceclient/common/http.py", line 285, in get |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] return self._request('GET', url, **kwargs) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/glanceclient/common/http.py", line 277, in _request |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] resp, body_iter = self._handle_response(resp) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] File "/var/lib/openstack/local/lib/python2.7/site-packages/glanceclient/common/http.py", line 107, in _handle_response |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] raise exc.from_response(resp, resp.content) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] HTTPInternalServerError: HTTPInternalServerError (HTTP 500) |

||

| + | 2019-02-03 13:20:56,466.466 21157 ERROR nova.compute.manager [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] |

||

| + | 2019-02-03 13:21:22,418.418 21157 INFO nova.compute.resource_tracker [req-cdb3800a-87ba-4ee9-88ad-e6914522a847 - - - - -] Final resource view: name=openstack phys_ram=16039MB used_ram=576MB phys_disk=48GB used_disk=1GB total_vcpus=8 used_vcpus=1 pci_stats=[] |

||

| + | 2019-02-03 13:21:27,224.224 21157 INFO nova.compute.manager [req-c0895961-b263-4122-82cc-5267be0aad8f 42068c166a3245208b5ac78965eab80b 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 - - -] [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] Terminating instance |

||

| + | 2019-02-03 13:21:27,332.332 21157 INFO nova.virt.libvirt.driver [-] [instance: 6aa5979e-1e03-4c8c-92bf-b1c1a43022ad] Instance destroyed successfully. |

||

| + | </PRE> |

||

| + | I suspected that the root cause was the overall slowness of the environment: |

||

| − | ===== Processing [helm-toolkit] chart ===== |

||

| + | <PRE> |

||

| − | if [ -f helm-toolkit/Makefile ]; then make --directory=helm-toolkit; fi |

||

| + | 2019-02-03 12:57:35,835.835 21157 WARNING nova.scheduler.client.report [req-cdb3800a-87ba-4ee9-88ad-e6914522a847 - - - - -] Failed to update inventory for resource provider 57daad8e-d831-4271-b3ef-332237d32b49: 503 503 Service Unavailable |

||

| − | find: secrets: No such file or directory |

||

| + | The server is currently unavailable. Please try again at a later time. |

||

| − | echo Generating /Users/mmazur/WORK_OTHER/Mirantis_test_task/ceph-helm/ceph/helm-toolkit/templates/_secrets.tpl |

||

| + | </PRE> |

||

| − | Generating /Users/mmazur/WORK_OTHER/Mirantis_test_task/ceph-helm/ceph/helm-toolkit/templates/_secrets.tpl |

||

| + | So I simply cleaned up the stack and commented out the network creation in the script. |

||

| − | rm -f templates/_secrets.tpl |

||

| + | After that the VM was created successfully: |

||

| − | for i in ; do printf '{{ define "'$i'" }}' >> templates/_secrets.tpl; cat $i >> templates/_secrets.tpl; printf "{{ end }}\n" >> templates/_secrets.tpl; done |

||

| + | <BR> |

||

| − | if [ -f helm-toolkit/requirements.yaml ]; then helm dependency update helm-toolkit; fi |

||

| + | <PRE> |

||

| − | Hang tight while we grab the latest from your chart repositories... |

||

| + | openstack server list |

||

| − | ...Successfully got an update from the "local" chart repository |

||

| + | +--------------------------------------+----------------------------------------------+--------+------------------------------------------------------------------------+---------------------+----------------------------------------------+ |

||

| − | ...Successfully got an update from the "stable" chart repository |

||

| + | | ID | Name | Status | Networks | Image | Flavor | |

||

| − | Update Complete. ⎈Happy Helming!⎈ |

||

| + | +--------------------------------------+----------------------------------------------+--------+------------------------------------------------------------------------+---------------------+----------------------------------------------+ |

||

| − | Saving 0 charts |

||

| + | | 155405cd-011a-42a2-93d7-3ed6eda250b2 | heat-basic-vm-deployment-server-ynxjzrycsd3z | ACTIVE | heat-basic-vm-deployment-private_net-tbltedh44qjv=10.0.0.4, 172.24.4.5 | Cirros 0.3.5 64-bit | heat-basic-vm-deployment-flavor-3kbmengg2bkm | |

||

| − | Deleting outdated charts |

||

| + | +--------------------------------------+----------------------------------------------+--------+------------------------------------------------------------------------+---------------------+----------------------------------------------+ |

||

| − | if [ -d helm-toolkit ]; then helm lint helm-toolkit; fi |

||

| + | </PRE> |

||

| − | ==> Linting helm-toolkit |

||

| + | <PRE> |

||

| − | [INFO] Chart.yaml: icon is recommended |

||

| + | root@openstack:~# openstack stack list |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | | ID | Stack Name | Project | Stack Status | Creation Time | Updated Time | |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | | 1f90d25b-eb19-48cd-b623-cc0c7bccc28f | heat-vm-volume-attach | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:36:15Z | None | |

||

| + | | 8d4ce486-ddb0-4826-b225-6b7dc4eef157 | heat-basic-vm-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:35:11Z | None | |

||

| + | | d0aeea69-4639-4942-a905-ec30ed99aa47 | heat-subnet-pool-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:16:29Z | None | |

||

| + | | 688585e9-9b99-4ac7-bd04-9e7b874ec6c7 | heat-public-net-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:16:09Z | None | |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | </PRE> |
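The cleanup step mentioned above (removing the broken stack before re-running the script) could be sketched roughly as follows. This is only an illustration, assuming the broken stack ends up in a `*_FAILED` state; the helper names `failed_stacks` and `cleanup_failed_stacks` are hypothetical and not part of openstack-helm.

```shell
#!/usr/bin/env bash
# Sketch only: find Heat stacks in a *_FAILED state and delete them.

# Hypothetical helper: reads "NAME STATUS" lines on stdin and prints
# the names of stacks whose status ends in _FAILED.
failed_stacks() {
  awk '$2 ~ /_FAILED$/ { print $1 }'
}

# Hypothetical helper: deletes every failed stack and waits for completion.
# Assumes OS_CLOUD (or equivalent credentials) is already exported.
cleanup_failed_stacks() {
  openstack stack list -f value -c "Stack Name" -c "Stack Status" |
    failed_stacks |
    while read -r name; do
      openstack stack delete --yes --wait "$name"
    done
}
```

Running `cleanup_failed_stacks` before re-running the deployment script would leave the stacks in `CREATE_COMPLETE` untouched.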

||

| + | Now I need to create three VMs and check connectivity between them |

||

| − | 1 chart(s) linted, no failures |

||

| + | <BR> |

||

| − | if [ -d helm-toolkit ]; then helm package helm-toolkit; fi |

||

| + | To avoid doing this by hand, I reused the same script, wrapping the stack creation in <B>for I in $(seq 1 3); do</B> |

||

| − | Successfully packaged chart and saved it to: /Users/mmazur/WORK_OTHER/Mirantis_test_task/ceph-helm/ceph/helm-toolkit-0.1.0.tgz |

||

| + | <PRE> |

||

| + | for I in $(seq 1 3); do |

||

| + | openstack stack create --wait \ |

||

| + | --parameter public_net=${OSH_EXT_NET_NAME} \ |

||

| + | --parameter image="${IMAGE_NAME}" \ |

||

| + | --parameter ssh_key=${OSH_VM_KEY_STACK} \ |

||

| + | --parameter cidr=${OSH_PRIVATE_SUBNET} \ |

||

| + | --parameter dns_nameserver=${OSH_BR_EX_ADDR%/*} \ |

||

| + | -t ./tools/gate/files/heat-basic-vm-deployment.yaml \ |

||

| + | heat-basic-vm-deployment-${I} |

||

| + | ... |

||

| + | </PRE> |
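For reference, a self-contained sketch of such a loop is given below. It assumes the same environment variables as the fragment above are already exported by the gate script. The `stack_name` and `run` helpers and the `DRY_RUN` switch are hypothetical additions for illustration. The part of the script elided by `...` above is not reproduced here.

```shell
#!/usr/bin/env bash
# Sketch only: create three identical Heat stacks in a loop.

# Hypothetical helper: builds the per-iteration stack name.
stack_name() {
  printf 'heat-basic-vm-deployment-%s\n' "$1"
}

# Hypothetical helper: prints the command unless DRY_RUN=0 is set,
# so the loop can be inspected before anything is created.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_stacks() {
  local i
  for i in $(seq 1 3); do
    run openstack stack create --wait \
      --parameter public_net="${OSH_EXT_NET_NAME}" \
      --parameter image="${IMAGE_NAME}" \
      --parameter ssh_key="${OSH_VM_KEY_STACK}" \
      --parameter cidr="${OSH_PRIVATE_SUBNET}" \
      --parameter dns_nameserver="${OSH_BR_EX_ADDR%/*}" \
      -t ./tools/gate/files/heat-basic-vm-deployment.yaml \
      "$(stack_name "$i")"
  done
}
```

Calling `create_stacks` prints the three commands; `DRY_RUN=0 create_stacks` would actually execute them.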

||

| + | The result: |

||

| + | <PRE> |

||

| + | # openstack stack list |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | | ID | Stack Name | Project | Stack Status | Creation Time | Updated Time | |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | | a6f1e35e-7536-4707-bdfa-b2885ab7cae2 | heat-vm-volume-attach-3 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:48:31Z | None | |

||

| + | | ccb63a87-37f4-4355-b399-ef4abb43983b | heat-basic-vm-deployment-3 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:47:25Z | None | |

||

| + | | 6d61b8de-80cd-4138-bfe2-8333a4b354ce | heat-vm-volume-attach-2 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:47:12Z | None | |

||

| + | | 75c44f1c-d8da-422e-a027-f16b8458e224 | heat-basic-vm-deployment-2 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:46:08Z | None | |

||

| + | | 95da63ac-9e20-4492-b3a6-fab74649bbf9 | heat-vm-volume-attach-1 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:45:54Z | None | |

||

| + | | 447881bb-6c93-4b92-9765-578782ee2ef5 | heat-basic-vm-deployment-1 | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:44:42Z | None | |

||

| + | | 1f90d25b-eb19-48cd-b623-cc0c7bccc28f | heat-vm-volume-attach | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:36:15Z | None | |

||

| + | | 8d4ce486-ddb0-4826-b225-6b7dc4eef157 | heat-basic-vm-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:35:11Z | None | |

||

| + | | d0aeea69-4639-4942-a905-ec30ed99aa47 | heat-subnet-pool-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:16:29Z | None | |

||

| + | | 688585e9-9b99-4ac7-bd04-9e7b874ec6c7 | heat-public-net-deployment | 2cb7f2c19a6f4e148bc3f9d0d0b7ed44 | CREATE_COMPLETE | 2019-02-03T13:16:09Z | None | |

||

| + | +--------------------------------------+-----------------------------+----------------------------------+-----------------+----------------------+--------------+ |

||

| + | </PRE> |

||

| + | <PRE> |

||

| + | openstack server list |

||

| + | +--------------------------------------+------------------------------------------------+--------+---------------------------------------------------------------------------+---------------------+------------------------------------------------+ |

||

| + | | ID | Name | Status | Networks | Image | Flavor | |

||

| + | +--------------------------------------+------------------------------------------------+--------+---------------------------------------------------------------------------+---------------------+------------------------------------------------+ |

||

| + | | 412cbd8b-e4c1-46e8-b48c-065e9830bfa8 | heat-basic-vm-deployment-3-server-v5lwzoyotkwo | ACTIVE | heat-basic-vm-deployment-3-private_net-4unttrj2lq6z=10.0.0.6, 172.24.4.18 | Cirros 0.3.5 64-bit | heat-basic-vm-deployment-3-flavor-3gncn5vwfu6z | |

||

| + | | e7a4e42c-aa9c-47bc-ba7b-d229af1a2077 | heat-basic-vm-deployment-2-server-vhacv5jz7dnt | ACTIVE | heat-basic-vm-deployment-2-private_net-2gz44w5rjy7s=10.0.0.6, 172.24.4.11 | Cirros 0.3.5 64-bit | heat-basic-vm-deployment-2-flavor-hxr5eawiveg5 | |

||

| + | | 52886edd-be09-4ba1-aebd-5563f25f4f60 | heat-basic-vm-deployment-1-server-wk5lxhhnhhyn | ACTIVE | heat-basic-vm-deployment-1-private_net-hqx3dmohj3n5=10.0.0.5, 172.24.4.12 | Cirros 0.3.5 64-bit | heat-basic-vm-deployment-1-flavor-6aiokzvf4qaq | |

||

| + | | 155405cd-011a-42a2-93d7-3ed6eda250b2 | heat-basic-vm-deployment-server-ynxjzrycsd3z | ACTIVE | heat-basic-vm-deployment-private_net-tbltedh44qjv=10.0.0.4, 172.24.4.5 | Cirros 0.3.5 64-bit | heat-basic-vm-deployment-flavor-3kbmengg2bkm | |

||

| + | +--------------------------------------+------------------------------------------------+--------+---------------------------------------------------------------------------+---------------------+------------------------------------------------+ |

||

| + | </PRE> |

||

| + | Network check (port 22 is definitely open): |

||

| + | <PRE> |

||

| + | root@openstack:/etc/openstack# ssh -i /root/.ssh/osh_key cirros@172.24.4.18 |

||

| + | $ nc 172.24.4.11 22 |

||

| − | ===== Processing [ceph] chart ===== |

||

| + | SSH-2.0-dropbear_2012.55 |

||

| − | if [ -f ceph/Makefile ]; then make --directory=ceph; fi |

||

| + | ^Cpunt! |

||

| − | if [ -f ceph/requirements.yaml ]; then helm dependency update ceph; fi |

||

| − | Hang tight while we grab the latest from your chart repositories... |

||

| − | ...Successfully got an update from the "local" chart repository |

||

| − | ...Successfully got an update from the "stable" chart repository |

||

| − | Update Complete. ⎈Happy Helming!⎈ |

||

| − | Saving 1 charts |

||

| − | Downloading helm-toolkit from repo http://localhost:8879/charts |

||

| − | Deleting outdated charts |

||

| − | if [ -d ceph ]; then helm lint ceph; fi |

||

| − | ==> Linting ceph |

||

| − | [INFO] Chart.yaml: icon is recommended |

||

| + | $ nc 172.24.4.12 22 |

||

| − | 1 chart(s) linted, no failures |

||

| + | SSH-2.0-dropbear_2012.55 |

||

| − | if [ -d ceph ]; then helm package ceph; fi |

||

| + | ^Cpunt! |

||

| − | Successfully packaged chart and saved it to: /Users/mmazur/WORK_OTHER/Mirantis_test_task/ceph-helm/ceph/ceph-0.1.0.tgz |

||

| + | |||

| + | $ |

||

</PRE> |

</PRE> |
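The manual check above could be prepared for all VM pairs with a small sketch like the one below. It assumes the floating IP is the last address in the `Networks` column, as in the `openstack server list` output shown earlier; `floating_ips` and `ip_pairs` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch only: derive the VM-to-VM pairs that need a connectivity check.

# Hypothetical helper: reads "net=FIXED_IP, FLOATING_IP" lines on stdin
# and prints the last (floating) address of each line.
floating_ips() {
  awk -F', ' 'NF > 1 { print $NF }'
}

# Hypothetical helper: reads addresses on stdin and prints every ordered
# "SRC DST" pair of distinct addresses, one pair per line.
ip_pairs() {
  local ips src dst
  ips=$(cat)
  for src in $ips; do
    for dst in $ips; do
      [ "$src" = "$dst" ] || echo "$src $dst"
    done
  done
}
```

Feeding `openstack server list -f value -c Networks` through `floating_ips | ip_pairs` yields the list of source and destination addresses; each pair can then be checked with the same `ssh` plus `nc DST 22` sequence used above.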

||

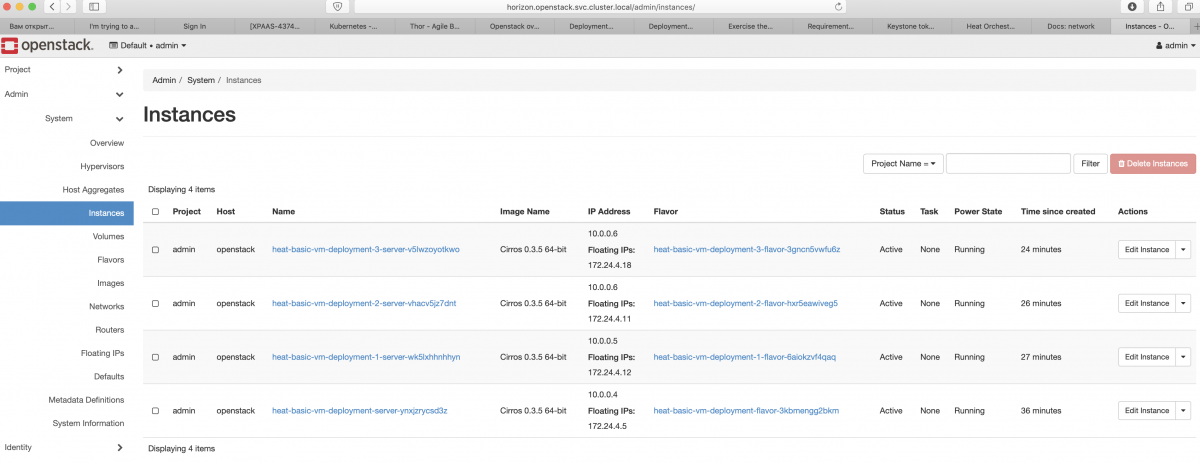

| + | ===Horizon with VMs=== |

||

| + | [[Изображение:Horizon With VMs.png|1200px]] |

||

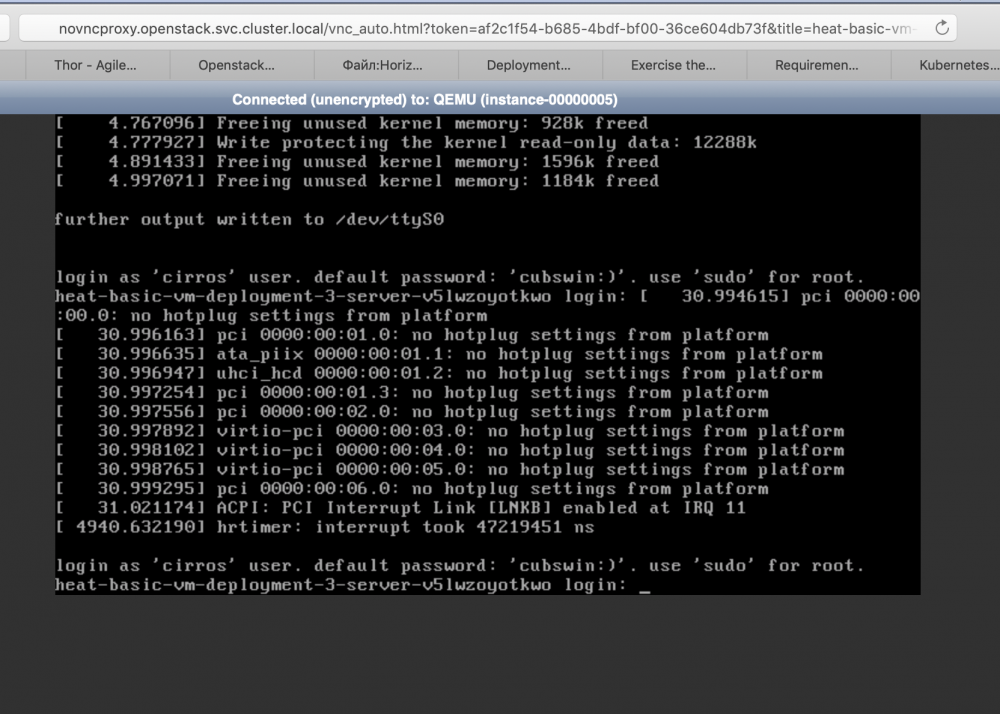

| + | <BR>Surprisingly, even the VNC console worked |

||

| + | <BR> |

||

| + | [[Изображение:Horizon VNC.png|1000px]] |

||

| + | ===Logs=== |

||

| + | ====MariaDB==== |

||

| + | <PRE> |

||

| + | + ./tools/deployment/common/wait-for-pods.sh openstack |

||

| + | + helm status mariadb |

||

| + | LAST DEPLOYED: Sun Feb 3 10:25:00 2019 |

||

| + | NAMESPACE: openstack |

||

| + | STATUS: DEPLOYED |

||

| + | RESOURCES: |

||

| − | * https://github.com/ceph/ceph-helm |

||

| + | ==> v1beta1/PodDisruptionBudget |

||

| + | NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE |

||

| + | mariadb-server 0 N/A 1 13h |

||

| + | ==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | mariadb-bin 5 13h |

||

| + | mariadb-etc 5 13h |

||

| + | mariadb-services-tcp 1 13h |

||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | mariadb-ingress-error-pages 1 13h |

||

| + | mariadb-ingress 1 13h |

||

| + | mariadb-mariadb 1 13h |

||

| + | |||

| + | ==> v1beta1/RoleBinding |

||

| + | NAME AGE |

||

| + | mariadb-mariadb-ingress 13h |

||

| + | mariadb-ingress 13h |

||

| + | mariadb-mariadb 13h |

||

| + | |||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | mariadb-discovery ClusterIP None <none> 3306/TCP,4567/TCP 13h |

||

| + | mariadb-ingress-error-pages ClusterIP None <none> 80/TCP 13h |

||

| + | mariadb ClusterIP 10.104.164.168 <none> 3306/TCP 13h |

||

| + | mariadb-server ClusterIP 10.107.255.234 <none> 3306/TCP 13h |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | mariadb-netpol application=mariadb 13h |

||

| + | |||

| + | ==> v1/Secret |

||

| + | NAME TYPE DATA AGE |

||

| + | mariadb-dbadmin-password Opaque 1 13h |

||

| + | mariadb-secrets Opaque 1 13h |

||

| + | |||

| + | ==> v1beta1/Role |

||

| + | NAME AGE |

||

| + | mariadb-ingress 13h |

||

| + | mariadb-openstack-mariadb-ingress 13h |

||

| + | mariadb-mariadb 13h |

||

| + | |||

| + | ==> v1/Deployment |

||

| + | NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE |

||

| + | mariadb-ingress-error-pages 1 1 1 1 13h |

||

| + | mariadb-ingress 2 2 2 2 13h |

||

| + | |||

| + | ==> v1/StatefulSet |

||

| + | NAME DESIRED CURRENT AGE |

||

| + | mariadb-server 1 1 13h |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | mariadb-ingress-error-pages-5c89b57bc-twn7z 1/1 Running 0 13h |

||

| + | mariadb-ingress-5cff98cbfc-24vjg 1/1 Running 0 13h |

||

| + | mariadb-ingress-5cff98cbfc-nqlhq 1/1 Running 0 13h |

||

| + | mariadb-server-0 1/1 Running 0 13h |

||

| + | |||

| + | |||

| + | </PRE> |

||

| + | ====RabbitMQ==== |

||

<PRE> |

<PRE> |

||

| + | + helm upgrade --install rabbitmq ../openstack-helm-infra/rabbitmq --namespace=openstack --values=/tmp/rabbitmq.yaml --set pod.replicas.server=1 |

||

| − | kubectl describe node minikube |

||

| + | Release "rabbitmq" does not exist. Installing it now. |

||

| − | Name: minikube |

||

| + | NAME: rabbitmq |

||

| − | Roles: master |

||

| + | LAST DEPLOYED: Sun Feb 3 10:27:01 2019 |

||

| − | Labels: beta.kubernetes.io/arch=amd64 |

||

| + | NAMESPACE: openstack |

||

| − | beta.kubernetes.io/os=linux |

||

| + | STATUS: DEPLOYED |

||

| − | kubernetes.io/hostname=minikube |

||

| + | |||

| − | node-role.kubernetes.io/master= |

||

| + | RESOURCES: |

||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | rabbitmq-dsv-7b1733 ClusterIP None <none> 5672/TCP,25672/TCP,15672/TCP 2s |

||

| + | rabbitmq-mgr-7b1733 ClusterIP 10.111.11.128 <none> 80/TCP,443/TCP 2s |

||

| + | rabbitmq ClusterIP 10.108.248.80 <none> 5672/TCP,25672/TCP,15672/TCP 2s |

||

| + | |||

| + | ==> v1/StatefulSet |

||

| + | NAME DESIRED CURRENT AGE |

||

| + | rabbitmq-rabbitmq 1 1 2s |

||

| + | |||

| + | ==> v1beta1/Ingress |

||

| + | NAME HOSTS ADDRESS PORTS AGE |

||

| + | rabbitmq-mgr-7b1733 rabbitmq-mgr-7b1733,rabbitmq-mgr-7b1733.openstack,rabbitmq-mgr-7b1733.openstack.svc.cluster.local 80 2s |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | rabbitmq-rabbitmq-0 0/1 Pending 0 2s |

||

| + | |||

| + | ==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | rabbitmq-rabbitmq-bin 4 3s |

||

| + | rabbitmq-rabbitmq-etc 2 2s |

||

| + | |||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | rabbitmq-test 1 2s |

||

| + | rabbitmq-rabbitmq 1 2s |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | rabbitmq-netpol application=rabbitmq 2s |

||

| + | |||

| + | ==> v1beta1/Role |

||

| + | NAME AGE |

||

| + | rabbitmq-openstack-rabbitmq-test 2s |

||

| + | rabbitmq-rabbitmq 2s |

||

| + | |||

| + | ==> v1beta1/RoleBinding |

||

| + | NAME AGE |

||

| + | rabbitmq-rabbitmq-test 2s |

||

| + | rabbitmq-rabbitmq 2s |

||

| + | |||

| + | |||

| + | + ./tools/deployment/common/wait-for-pods.sh openstack |

||

| + | + helm status rabbitmq |

||

| + | LAST DEPLOYED: Sun Feb 3 10:27:01 2019 |

||

| + | NAMESPACE: openstack |

||

| + | STATUS: DEPLOYED |

||

| + | |||

| + | RESOURCES: |

||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | rabbitmq-dsv-7b1733 ClusterIP None <none> 5672/TCP,25672/TCP,15672/TCP 2m21s |

||

| + | rabbitmq-mgr-7b1733 ClusterIP 10.111.11.128 <none> 80/TCP,443/TCP 2m21s |

||

| + | rabbitmq ClusterIP 10.108.248.80 <none> 5672/TCP,25672/TCP,15672/TCP 2m21s |

||

| + | |||

| + | ==> v1beta1/Ingress |

||

| + | NAME HOSTS ADDRESS PORTS AGE |

||

| + | rabbitmq-mgr-7b1733 rabbitmq-mgr-7b1733,rabbitmq-mgr-7b1733.openstack,rabbitmq-mgr-7b1733.openstack.svc.cluster.local 80 2m21s |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | rabbitmq-netpol application=rabbitmq 2m21s |

||

| + | |||

| + | ==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | rabbitmq-rabbitmq-bin 4 2m22s |

||

| + | rabbitmq-rabbitmq-etc 2 2m21s |

||

| + | |||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | rabbitmq-test 1 2m21s |

||

| + | rabbitmq-rabbitmq 1 2m21s |

||

| + | |||

| + | ==> v1beta1/RoleBinding |

||

| + | NAME AGE |

||

| + | rabbitmq-rabbitmq-test 2m21s |

||

| + | rabbitmq-rabbitmq 2m21s |

||

| + | |||

| + | ==> v1beta1/Role |

||

| + | NAME AGE |

||

| + | rabbitmq-openstack-rabbitmq-test 2m21s |

||

| + | rabbitmq-rabbitmq 2m21s |

||

| + | |||

| + | ==> v1/StatefulSet |

||

| + | NAME DESIRED CURRENT AGE |

||

| + | rabbitmq-rabbitmq 1 1 2m21s |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | rabbitmq-rabbitmq-0 1/1 Running 0 2m21s |

||

</PRE> |

</PRE> |

||

| + | |||

<PRE> |

<PRE> |

||

| + | root@openstack:~/mira/openstack-helm# echo $? |

||

| − | kubectl label node minikube ceph-mon=enabled ceph-mgr=enabled ceph-osd=enabled ceph-osd-device-dev-sdb=enabled ceph-osd-device-dev-sdc=enabled |

||

| + | 0 |

||

</PRE> |

</PRE> |
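The logs above rely on `wait-for-pods.sh` and the exit code to confirm that a chart came up. As a rough illustration of the same idea (not the actual contents of that script, which are not shown here), the pod table can be filtered for anything that is neither fully ready nor completed. The helper name `not_ready_pods` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: list pods that are not yet Running (fully ready) or Completed.

# Hypothetical helper: reads "NAME READY STATUS RESTARTS AGE" rows
# (for example from `kubectl get pods -n openstack --no-headers`)
# and prints the names of pods that still need attention.
not_ready_pods() {
  awk '{
    split($2, ready, "/")
    if ($3 == "Completed") next
    if ($3 == "Running" && ready[1] == ready[2]) next
    print $1
  }'
}
```

An empty result from `kubectl get pods -n openstack --no-headers | not_ready_pods` would correspond to the state in which the deployment script moves on to the next chart.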

||

| + | |||

| + | ====Memcached==== |

||

<PRE> |

<PRE> |

||

| + | |||

| − | kubectl describe node minikube |

||

| + | + helm upgrade --install memcached ../openstack-helm-infra/memcached --namespace=openstack --values=/tmp/memcached.yaml |

||

| − | Name: minikube |

||

| + | Release "memcached" does not exist. Installing it now. |

||

| − | Roles: master |

||

| + | NAME: memcached |

||

| − | Labels: beta.kubernetes.io/arch=amd64 |

||

| + | LAST DEPLOYED: Sun Feb 3 10:30:32 2019 |

||

| − | beta.kubernetes.io/os=linux |

||

| + | NAMESPACE: openstack |

||

| − | ceph-mgr=enabled |

||

| + | STATUS: DEPLOYED |

||

| − | ceph-mon=enabled |

||

| + | |||

| − | ceph-osd=enabled |

||

| + | RESOURCES: |

||

| − | ceph-osd-device-dev-sdb=enabled |

||

| + | ==> v1/ConfigMap |

||

| − | ceph-osd-device-dev-sdc=enabled |

||

| − | + | NAME DATA AGE |

|

| + | memcached-memcached-bin 1 3s |

||

| − | node-role.kubernetes.io/master= |

||

| + | |||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | memcached-memcached 1 3s |

||

| + | |||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | memcached ClusterIP 10.96.106.159 <none> 11211/TCP 3s |

||

| + | |||

| + | ==> v1/Deployment |

||

| + | NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE |

||

| + | memcached-memcached 1 1 1 0 3s |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | memcached-netpol application=memcached 3s |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | memcached-memcached-6d48bd48bc-7kd84 0/1 Init:0/1 0 2s |

||

| + | |||

| + | |||

| + | + ./tools/deployment/common/wait-for-pods.sh openstack |

||

| + | + helm status memcached |

||

| + | LAST DEPLOYED: Sun Feb 3 10:30:32 2019 |

||

| + | NAMESPACE: openstack |

||

| + | STATUS: DEPLOYED |

||

| + | |||

| + | RESOURCES: |

||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | memcached-netpol application=memcached 78s |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | memcached-memcached-6d48bd48bc-7kd84 1/1 Running 0 77s |

||

| + | |||

| + | ==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | memcached-memcached-bin 1 78s |

||

| + | |||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | memcached-memcached 1 78s |

||

| + | |||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | memcached ClusterIP 10.96.106.159 <none> 11211/TCP 78s |

||

| + | |||

| + | ==> v1/Deployment |

||

| + | NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE |

||

| + | memcached-memcached 1 1 1 1 78s |

||

</PRE> |

</PRE> |

||

| − | ==2== |

||

<PRE> |

<PRE> |

||

| + | root@openstack:~/mira/openstack-helm# echo $? |

||

| − | helm install --name=ceph local/ceph |

||

| + | 0 |

||

| − | NAME: ceph |

||

| + | </PRE> |

||

| − | LAST DEPLOYED: Sat Feb 2 14:31:32 2019 |

||

| + | |||

| − | NAMESPACE: default |

||

| + | ====Keystone==== |

||

| + | <PRE> |

||

| + | + ./tools/deployment/common/wait-for-pods.sh openstack |

||

| + | + helm status keystone |

||

| + | LAST DEPLOYED: Sun Feb 3 10:36:19 2019 |

||

| + | NAMESPACE: openstack |

||

STATUS: DEPLOYED |

STATUS: DEPLOYED |

||

RESOURCES: |

RESOURCES: |

||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | keystone-credential-rotate 1 7m20s |

||

| + | keystone-fernet-rotate 1 7m20s |

||

| + | keystone-api 1 7m20s |

||

| + | keystone-bootstrap 1 7m20s |

||

| + | keystone-credential-setup 1 7m19s |

||

| + | keystone-db-init 1 7m19s |

||

| + | keystone-db-sync 1 7m19s |

||

| + | keystone-domain-manage 1 7m19s |

||

| + | keystone-fernet-setup 1 7m19s |

||

| + | keystone-rabbit-init 1 7m19s |

||

| + | keystone-test 1 7m19s |

||

| + | |||

| + | ==> v1beta1/RoleBinding |

||

| + | NAME AGE |

||

| + | keystone-keystone-credential-rotate 7m19s |

||

| + | keystone-credential-rotate 7m19s |

||

| + | keystone-fernet-rotate 7m19s |

||

| + | keystone-keystone-fernet-rotate 7m18s |

||

| + | keystone-keystone-api 7m18s |

||

| + | keystone-keystone-bootstrap 7m18s |

||

| + | keystone-credential-setup 7m18s |

||

| + | keystone-keystone-db-init 7m18s |

||

| + | keystone-keystone-db-sync 7m18s |

||

| + | keystone-keystone-domain-manage 7m18s |

||

| + | keystone-fernet-setup 7m18s |

||

| + | keystone-keystone-rabbit-init 7m18s |

||

| + | keystone-keystone-test 7m18s |

||

| + | |||

==> v1/Service |

==> v1/Service |

||

| − | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

+ | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

| − | + | keystone-api ClusterIP 10.110.158.186 <none> 5000/TCP 7m18s |

|

| − | + | keystone ClusterIP 10.108.1.22 <none> 80/TCP,443/TCP 7m18s |

|

| − | ==> |

+ | ==> v1/Deployment |

| − | NAME DESIRED CURRENT |

+ | NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE |

| − | + | keystone-api 1 1 1 1 7m18s |

|

| − | ==> v1beta1/ |

+ | ==> v1beta1/CronJob |

| − | NAME |

+ | NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE |

| − | + | keystone-credential-rotate 0 0 1 * * False 0 <none> 7m18s |

|

| − | + | keystone-fernet-rotate 0 */12 * * * False 0 <none> 7m18s |

|

| − | ceph-mon-check 1 1 1 0 0s |

||

| − | ceph-rbd-provisioner 2 2 2 0 0s |

||

| − | ceph-rgw 1 1 1 0 0s |

||

| − | ==> |

+ | ==> v1beta1/Ingress |

| − | NAME |

+ | NAME HOSTS ADDRESS PORTS AGE |

| + | keystone keystone,keystone.openstack,keystone.openstack.svc.cluster.local 80 7m18s |

||

| − | ceph-mon-keyring-generator 0/1 0s 0s |

||

| + | |||

| − | ceph-rgw-keyring-generator 0/1 0s 0s |

||

| + | ==> v1beta1/PodDisruptionBudget |

||

| − | ceph-mgr-keyring-generator 0/1 0s 0s |

||

| + | NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE |

||

| − | ceph-osd-keyring-generator 0/1 0s 0s |

||

| − | + | keystone-api 0 N/A 1 7m20s |

|

| + | |||

| − | ceph-namespace-client-key-generator 0/1 0s 0s |

||

| + | ==> v1/Secret |

||

| − | ceph-storage-keys-generator 0/1 0s 0s |

||

| + | NAME TYPE DATA AGE |

||

| + | keystone-etc Opaque 9 7m20s |

||

| + | keystone-credential-keys Opaque 2 7m20s |

||

| + | keystone-db-admin Opaque 1 7m20s |

||

| + | keystone-db-user Opaque 1 7m20s |

||

| + | keystone-fernet-keys Opaque 2 7m20s |

||

| + | keystone-keystone-admin Opaque 8 7m20s |

||

| + | keystone-keystone-test Opaque 8 7m20s |

||

| + | keystone-rabbitmq-admin Opaque 1 7m20s |

||

| + | keystone-rabbitmq-user Opaque 1 7m20s |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | keystone-netpol application=keystone 7m18s |

||

==> v1/Pod(related) |

==> v1/Pod(related) |

||

| − | NAME |

+ | NAME READY STATUS RESTARTS AGE |

| − | + | keystone-api-f658f747c-q6w65 1/1 Running 0 7m18s |

|

| − | + | keystone-bootstrap-ds8t5 0/1 Completed 0 7m18s |

|

| − | + | keystone-credential-setup-hrp8t 0/1 Completed 0 7m18s |

|

| − | + | keystone-db-init-dhgf2 0/1 Completed 0 7m18s |

|

| − | + | keystone-db-sync-z8d5d 0/1 Completed 0 7m18s |

|

| − | + | keystone-domain-manage-86b25 0/1 Completed 0 7m18s |

|

| − | + | keystone-fernet-setup-txgc8 0/1 Completed 0 7m18s |

|

| − | + | keystone-rabbit-init-jgkqz 0/1 Completed 0 7m18s |

|

| + | |||

| − | ceph-rgw-keyring-generator-x4vrz 0/1 Pending 0 0s |

||

| + | ==> v1/Job |

||

| − | ceph-mgr-keyring-generator-4tncv 0/1 Pending 0 0s |

||

| − | + | NAME COMPLETIONS DURATION AGE |

|

| − | + | keystone-bootstrap 1/1 7m13s 7m18s |

|

| − | + | keystone-credential-setup 1/1 2m6s 7m18s |

|

| − | + | keystone-db-init 1/1 3m46s 7m18s |

|

| + | keystone-db-sync 1/1 6m11s 7m18s |

||

| + | keystone-domain-manage 1/1 6m51s 7m18s |

||

| + | keystone-fernet-setup 1/1 3m52s 7m18s |

||

| + | keystone-rabbit-init 1/1 5m33s 7m18s |

||

| + | |||

| + | ==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | keystone-bin 13 7m20s |

||

| + | |||

| + | ==> v1beta1/Role |

||

| + | NAME AGE |

||

| + | keystone-openstack-keystone-credential-rotate 7m19s |

||

| + | keystone-credential-rotate 7m19s |

||

| + | keystone-fernet-rotate 7m19s |

||

| + | keystone-openstack-keystone-fernet-rotate 7m19s |

||

| + | keystone-openstack-keystone-api 7m19s |

||

| + | keystone-openstack-keystone-bootstrap 7m19s |

||

| + | keystone-credential-setup 7m19s |

||

| + | keystone-openstack-keystone-db-init 7m19s |

||

| + | keystone-openstack-keystone-db-sync 7m19s |

||

| + | keystone-openstack-keystone-domain-manage 7m19s |

||

| + | keystone-fernet-setup 7m19s |

||

| + | keystone-openstack-keystone-rabbit-init 7m19s |

||

| + | keystone-openstack-keystone-test 7m19s |

||

| + | |||

| + | |||

| + | + export OS_CLOUD=openstack_helm |

||

| + | + OS_CLOUD=openstack_helm |

||

| + | + sleep 30 |

||

| + | + openstack endpoint list |

||

| + | +----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------------------------------------+ |

||

| + | | ID | Region | Service Name | Service Type | Enabled | Interface | URL | |

||

| + | +----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------------------------------------+ |

||

| + | | 0f9f179d90a64e76ac65873826a4851e | RegionOne | keystone | identity | True | internal | http://keystone-api.openstack.svc.cluster.local:5000/v3 | |

||

| + | | 1ea5e2909c574c01bc815b96ba818db3 | RegionOne | keystone | identity | True | public | http://keystone.openstack.svc.cluster.local:80/v3 | |

||

| + | | 32e745bc02af4e5cb20830c83fc626e3 | RegionOne | keystone | identity | True | admin | http://keystone.openstack.svc.cluster.local:80/v3 | |

||

| + | +----------------------------------+-----------+--------------+--------------+---------+-----------+---------------------------------------------------------+ |

||

| + | </PRE> |

||

| + | <PRE> |

||

| + | root@openstack:~/mira/openstack-helm# echo $? |

||

| + | 0 |

||

| + | </PRE> |

||

| + | |||

| + | ====Heat==== |

||

| + | <PRE> |

||

| + | + : |

||

| + | + helm upgrade --install heat ./heat --namespace=openstack --set manifests.network_policy=true |

||

| + | Release "heat" does not exist. Installing it now. |

||

| + | NAME: heat |

||

| + | LAST DEPLOYED: Sun Feb 3 10:57:10 2019 |

||

| + | NAMESPACE: openstack |

||

| + | STATUS: DEPLOYED |

||

| + | |||

| + | RESOURCES: |

||

| + | ==> v1beta1/CronJob |

||

| + | NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE |

||

| + | heat-engine-cleaner */5 * * * * False 0 <none> 4s |

||

==> v1/Secret |

==> v1/Secret |

||

| − | NAME TYPE DATA AGE |

+ | NAME TYPE DATA AGE |

| + | heat-etc Opaque 10 7s |

||

| − | ceph-keystone-user-rgw Opaque 7 1s |

||

| + | heat-db-user Opaque 1 7s |

||

| + | heat-db-admin Opaque 1 7s |

||

| + | heat-keystone-user Opaque 8 7s |

||

| + | heat-keystone-test Opaque 8 7s |

||

| + | heat-keystone-stack-user Opaque 5 7s |

||

| + | heat-keystone-trustee Opaque 8 7s |

||

| + | heat-keystone-admin Opaque 8 7s |

||

| + | heat-rabbitmq-admin Opaque 1 7s |

||

| + | heat-rabbitmq-user Opaque 1 7s |

||

| + | |||

| + | ==> v1/ServiceAccount |

||

| + | NAME SECRETS AGE |

||

| + | heat-engine-cleaner 1 7s |

||

| + | heat-api 1 7s |

||

| + | heat-cfn 1 7s |

||

| + | heat-engine 1 7s |

||

| + | heat-bootstrap 1 6s |

||

| + | heat-db-init 1 6s |

||

| + | heat-db-sync 1 6s |

||

| + | heat-ks-endpoints 1 6s |

||

| + | heat-ks-service 1 6s |

||

| + | heat-ks-user-domain 1 6s |

||

| + | heat-trustee-ks-user 1 6s |

||

| + | heat-ks-user 1 6s |

||

| + | heat-rabbit-init 1 6s |

||

| + | heat-trusts 1 6s |

||

| + | heat-test 1 6s |

||

| + | |||

| + | ==> v1beta1/RoleBinding |

||

| + | NAME AGE |

||

| + | heat-heat-engine-cleaner 5s |

||

| + | heat-heat-api 5s |

||

| + | heat-heat-cfn 5s |

||

| + | heat-heat-engine 5s |

||

| + | heat-heat-db-init 5s |

||

| + | heat-heat-db-sync 5s |

||

| + | heat-heat-ks-endpoints 5s |

||

| + | heat-heat-ks-service 5s |

||

| + | heat-heat-ks-user-domain 5s |

||

| + | heat-heat-trustee-ks-user 5s |

||

| + | heat-heat-ks-user 5s |

||

| + | heat-heat-rabbit-init 5s |

||

| + | heat-heat-trusts 5s |

||

| + | heat-heat-test 5s |

||

| + | |||

| + | ==> v1/Service |

||

| + | NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE |

||

| + | heat-api ClusterIP 10.107.126.110 <none> 8004/TCP 5s |

||

| + | heat-cfn ClusterIP 10.103.165.157 <none> 8000/TCP 5s |

||

| + | heat ClusterIP 10.106.167.63 <none> 80/TCP,443/TCP 5s |

||

| + | cloudformation ClusterIP 10.107.173.42 <none> 80/TCP,443/TCP 5s |

||

| + | |||

| + | ==> v1/Deployment |

||

| + | NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE |

||

| + | heat-api 1 1 1 0 5s |

||

| + | heat-cfn 1 1 1 0 5s |

||

| + | heat-engine 1 1 1 0 5s |

||

| + | |||

| + | ==> v1/NetworkPolicy |

||

| + | NAME POD-SELECTOR AGE |

||

| + | heat-netpol application=heat 4s |

||

| + | |||

| + | ==> v1/Pod(related) |

||

| + | NAME READY STATUS RESTARTS AGE |

||

| + | heat-api-69db75bb6d-h24w9 0/1 Init:0/1 0 5s |

||

| + | heat-cfn-86896f7466-n5dnz 0/1 Init:0/1 0 5s |

||

| + | heat-engine-6756c84fdd-44hzf 0/1 Init:0/1 0 5s |

||

| + | heat-bootstrap-v9642 0/1 Init:0/1 0 5s |

||

| + | heat-db-init-lfrsb 0/1 Pending 0 5s |

||

| + | heat-db-sync-wct2x 0/1 Init:0/1 0 5s |

||

| + | heat-ks-endpoints-wxjwp 0/6 Pending 0 5s |

||

| + | heat-ks-service-v95sk 0/2 Pending 0 5s |

||

| + | heat-domain-ks-user-4fg65 0/1 Pending 0 4s |

||

| + | heat-trustee-ks-user-mwrf5 0/1 Pending 0 4s |

||

| + | heat-ks-user-z6xhb 0/1 Pending 0 4s |

||

| + | heat-rabbit-init-77nzb 0/1 Pending 0 4s |

||

| + | heat-trusts-7x7nt 0/1 Pending 0 4s |

||

| + | |||

| + | ==> v1beta1/PodDisruptionBudget |

||

| + | NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE |

||

| + | heat-api 0 N/A 0 7s |

||

| + | heat-cfn 0 N/A 0 7s |

||

==> v1/ConfigMap |

||

| + | NAME DATA AGE |

||

| + | heat-bin 16 7s |

||

| − | ceph-bin 26 1s |

||

| − | ceph-etc 1 1s |

||

| − | ceph-templates 5 1s |

||

| + | ==> v1beta1/Role |

||

| + | NAME AGE |

| + | heat-openstack-heat-engine-cleaner 6s |

||

| − | general ceph.com/rbd 1s |

||

| + | heat-openstack-heat-api 6s |

||

| + | heat-openstack-heat-cfn 6s |

||

| + | heat-openstack-heat-engine 6s |

||

| + | heat-openstack-heat-db-init 6s |

||

| + | heat-openstack-heat-db-sync 6s |

||

| + | heat-openstack-heat-ks-endpoints 6s |

||

| + | heat-openstack-heat-ks-service 6s |

||

| + | heat-openstack-heat-ks-user-domain 6s |

||

| + | heat-openstack-heat-trustee-ks-user 6s |

||

| + | heat-openstack-heat-ks-user 6s |

||

| + | heat-openstack-heat-rabbit-init 6s |

||

| + | heat-openstack-heat-trusts 5s |

||

| + | heat-openstack-heat-test 5s |

||

| + | |||

| + | ==> v1/Job |

||

| + | NAME COMPLETIONS DURATION AGE |

||

| + | heat-bootstrap 0/1 5s 5s |

||

| + | heat-db-init 0/1 4s 5s |

||

| + | heat-db-sync 0/1 5s 5s |

||

| + | heat-ks-endpoints 0/1 4s 5s |

||

| + | heat-ks-service 0/1 4s 5s |

||

| + | heat-domain-ks-user 0/1 4s 4s |

||

| + | heat-trustee-ks-user 0/1 4s 4s |

||

| + | heat-ks-user 0/1 4s 4s |

||

| + | heat-rabbit-init 0/1 4s 4s |

||

| + | heat-trusts 0/1 4s 4s |

||

| + | |||

| + | ==> v1beta1/Ingress |

||

| + | NAME HOSTS ADDRESS PORTS AGE |

||

| + | heat heat,heat.openstack,heat.openstack.svc.cluster.local 80 4s |

||

| + | cloudformation cloudformation,cloudformation.openstack,cloudformation.openstack.svc.cluster.local 80 4s |

||

| + | |||

| + | |||

| + | + ./tools/deployment/common/wait-for-pods.sh openstack |

||

| + | + export OS_CLOUD=openstack_helm |

||

| + | + OS_CLOUD=openstack_helm |

||

| + | + openstack service list |

||

| + | +----------------------------------+----------+----------------+ |

||

| + | | ID | Name | Type | |

||

| + | +----------------------------------+----------+----------------+ |

||

| + | | 5c354b75377944888ac1cc9a3a088808 | heat | orchestration | |

||

| + | | a55578c3ca8948d28055511c6a2e59bc | heat-cfn | cloudformation | |

||

| + | | fa7df0be3e99442d8fe42bda7519072f | keystone | identity | |

||

| + | +----------------------------------+----------+----------------+ |

||

| + | + sleep 30 |

||

| + | + openstack orchestration service list |

||